The Impact of US Data Privacy Regulations on Machine Learning Training

New data privacy regulations in the US significantly impact machine learning model training, requiring adherence to specific guidelines to protect user data and ensure compliance.

The landscape of machine learning is rapidly evolving, not only in terms of algorithms and computational power but also in response to stricter data privacy regulations. The impact of new US data privacy regulations on machine learning model training is profound, affecting how data is collected, processed, and used.

Understanding the Evolving US Data Privacy Landscape

Data privacy has become a central concern in the digital age, leading to the enactment of various regulations designed to protect individuals’ personal information. In the US, this has resulted in a complex and evolving legal landscape.

These laws directly influence machine learning practices, particularly concerning the training of models. Let’s delve into the key aspects of this legal framework.

Key US Data Privacy Regulations

Several key regulations are shaping the data privacy landscape in the US. Understanding these is crucial for anyone involved in machine learning.

- California Consumer Privacy Act (CCPA): Grants consumers rights over their personal data, including the right to know, the right to delete, and the right to opt-out of the sale of their data.

- California Privacy Rights Act (CPRA): Amends and expands upon the CCPA, introducing new rights and establishing the California Privacy Protection Agency (CPPA) to enforce the law.

- Virginia Consumer Data Protection Act (CDPA): Provides consumers with rights similar to those under the CCPA, including the right to access, correct, and delete their personal data.

- Other State Laws: With no comprehensive federal privacy law in place, other states, such as Colorado, Connecticut, and Utah, have enacted their own data privacy laws, creating a patchwork of regulations across the country.

These regulations require organizations to implement robust data governance practices, including obtaining consent where the law requires it (for example, before processing sensitive data under Virginia's law), providing transparency, and ensuring data security.

In summary, the evolving US data privacy landscape necessitates a proactive and comprehensive approach to compliance, impacting machine learning model training through stricter data governance practices.

How Data Privacy Laws Impact Machine Learning Model Training

The impact of data privacy laws on machine learning model training is significant. These laws impose restrictions on the collection, processing, and use of personal data, requiring developers to adopt new techniques and strategies.

Compliance with these regulations can be complex and requires a deep understanding of both the legal requirements and the technical aspects of machine learning.

Data Minimization and Purpose Limitation

Data minimization requires organizations to collect only the data that is necessary for a specific purpose. Purpose limitation restricts the use of data to the purpose for which it was collected.

- Data Collection: Limit the collection of personal data to only what is strictly necessary for training the model.

- Data Usage: Ensure that the data is used only for the purpose for which it was collected and with appropriate consent.

- Data Retention: Retain data only for as long as necessary and securely dispose of it when it is no longer needed.
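The three practices above can be sketched as a filtering step applied before training. This is a minimal, hypothetical sketch: the `Record` type, the `churn_prediction` purpose, the feature allow-list, the per-record permission flags, and the 365-day retention window are all illustrative assumptions, not requirements from any specific law. Many US laws rely on opt-out rather than opt-in consent, so a real pipeline might check opt-out or deletion flags instead.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical purpose and allow-list: only the fields this model actually needs.
PURPOSE = "churn_prediction"
ALLOWED_FEATURES = ("age_bracket", "tenure_months", "monthly_spend")
# Assumed retention window for illustration, not a legal requirement.
RETENTION = timedelta(days=365)


@dataclass
class Record:
    user_id: str
    features: dict
    consented_purposes: frozenset  # hypothetical per-record permission flags
    collected_at: datetime


def minimize(records, now):
    """Return training rows that pass purpose, consent, and retention checks."""
    rows = []
    for rec in records:
        # Purpose limitation: skip records not permitted for this purpose.
        if PURPOSE not in rec.consented_purposes:
            continue
        # Retention: skip records older than the retention window.
        if now - rec.collected_at > RETENTION:
            continue
        # Data minimization: keep only allow-listed features (drops email and user_id).
        rows.append({k: rec.features[k] for k in ALLOWED_FEATURES if k in rec.features})
    return rows


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
records = [
    Record("u1", {"age_bracket": "25-34", "tenure_months": 14, "monthly_spend": 42.0,
                  "email": "a@example.com"}, frozenset({PURPOSE}), now - timedelta(days=30)),
    # No permission for this purpose, so it is excluded.
    Record("u2", {"age_bracket": "35-44", "tenure_months": 3, "monthly_spend": 18.5},
           frozenset(), now - timedelta(days=10)),
    # Past the retention window, so it is excluded.
    Record("u3", {"age_bracket": "18-24", "tenure_months": 40, "monthly_spend": 60.0},
           frozenset({PURPOSE}), now - timedelta(days=400)),
]
print(minimize(records, now))
# prints [{'age_bracket': '25-34', 'tenure_months': 14, 'monthly_spend': 42.0}]
```

Filtering before training, rather than after, means fields like the email address never reach the training job at all.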

By adhering to these principles, organizations reduce how much personal data is exposed if a breach occurs and make it easier to comply with data privacy regulations.

In essence, data minimization and purpose limitation are vital strategies for aligning machine learning model training with data privacy laws, reducing risk and ensuring responsible data handling.

Strategies for Privacy-Preserving Machine Learning

Given the constraints imposed by data privacy regulations, several strategies have emerged to enable privacy-preserving machine learning. These techniques limit what a model, or the process used to train it, can reveal about any individual, and some avoid centralizing raw personal data altogether.

By implementing these strategies, organizations can develop machine learning models that remain useful while complying with data privacy laws, although stronger privacy protection usually comes at some cost in accuracy.

Differential Privacy

Differential privacy is a technique that adds carefully calibrated random noise to data, query results, or model updates to protect the privacy of individuals. This noise ensures that the presence or absence of any single individual in the dataset does not significantly affect the outcome of the analysis.
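To make "does not significantly affect the outcome" precise, the standard formal definition, (ε, δ)-differential privacy, can be written as follows. A randomized mechanism M satisfies it if, for every pair of datasets D and D′ that differ in a single individual's record and every set of possible outputs S:

```latex
\Pr[\mathcal{M}(D) \in S] \;\le\; e^{\varepsilon}\,\Pr[\mathcal{M}(D') \in S] + \delta
```

A smaller ε forces the output distributions on D and D′ to be closer, so less can be inferred about any one person; setting δ = 0 gives pure ε-differential privacy.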

- Adding Noise: Introduce random noise to the data to obscure individual records.

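- Privacy Budget: A parameter, usually written ε (epsilon), bounds the total privacy loss; a smaller budget requires more noise, trading utility for stronger privacy.

- Mathematical Guarantees: Provides provable bounds on how much any single individual's data can change the output, regardless of what other information an attacker holds.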
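The Laplace mechanism is one common way to add calibrated noise. The sketch below releases a differentially private count and tracks the privacy budget with basic sequential composition, under which the ε values of successive queries simply add up. The `PrivacyBudget` class, the `private_count` function, and the sample ages are hypothetical. The noise sampler is not hardened against known floating-point attacks, so production systems should rely on a vetted differential privacy library.

```python
import random


class PrivacyBudget:
    """Tracks epsilon spent, using basic sequential composition (epsilons add up)."""

    def __init__(self, total_epsilon):
        self.remaining = total_epsilon

    def spend(self, epsilon):
        if epsilon <= 0 or epsilon > self.remaining:
            raise ValueError("privacy budget exhausted or invalid epsilon")
        self.remaining -= epsilon


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    # Each expovariate(1 / scale) draw has mean `scale`; their difference is Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, budget, rng):
    """Release a count satisfying epsilon-differential privacy via the Laplace mechanism.

    A counting query has sensitivity 1 (adding or removing one person changes
    the true count by at most 1), so the noise scale is 1 / epsilon.
    """
    budget.spend(epsilon)  # refuse the query if it would exceed the budget
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical example: how many users are 40 or older?
rng = random.Random(0)
budget = PrivacyBudget(total_epsilon=1.0)
ages = [23, 35, 41, 29, 52, 38, 61, 27]
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, budget=budget, rng=rng)
print(f"noisy count: {noisy:.2f}, epsilon remaining: {budget.remaining}")
```

With a total budget of 1.0 and ε = 0.5 per query, only two such counts can be released before `spend` refuses further queries.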

Differential privacy can be a powerful tool for protecting privacy while still enabling useful machine learning models.

In short, differential privacy offers a robust method for protecting individual privacy in machine learning by adding controlled noise to datasets, ensuring reliable privacy guarantees.

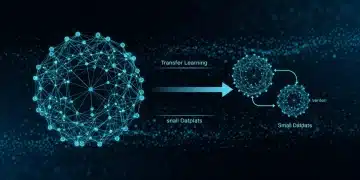

Federated Learning: A Decentralized Approach

Federated learning is a decentralized approach to machine learning that allows models to be trained on distributed devices without sharing the raw data. This is particularly useful when data is sensitive or cannot be moved due to regulatory constraints.

By training models locally on individual devices and then aggregating the results, federated learning lets organizations leverage machine learning without centralizing the raw data.

How Federated Learning Works

Federated learning involves training models locally on individual devices and then aggregating the results to create a global model.

- Local Training: Models are trained on individual devices using local data.

- Aggregation: The updates from each device are aggregated to create a global model.

- Deployment: The global model is deployed and used for predictions.
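The three steps above correspond closely to Federated Averaging (FedAvg), a widely used aggregation scheme. The following is a minimal, single-process simulation assuming a toy linear model (y = w·x + b) and three hypothetical clients with synthetic data. In a real deployment, each `local_train` call would run on a separate device and only the resulting weights would be sent to the server.

```python
import random


def local_train(weights, data, lr=0.05, epochs=5):
    """Run a few epochs of SGD for y = w * x + b on one client's local data."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y  # prediction error on one example
            w -= lr * err * x      # gradient of 0.5 * err**2 with respect to w
            b -= lr * err          # gradient of 0.5 * err**2 with respect to b
    return (w, b)


def federated_averaging(clients, rounds=20):
    """FedAvg: each round, clients train locally and the server averages their weights."""
    global_weights = (0.0, 0.0)
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        # Local training: every client starts from the current global model.
        # Only the resulting weights (not the data) are returned to the server.
        updates = [(local_train(global_weights, data), len(data)) for data in clients]
        # Aggregation: average parameters, weighting each client by its dataset size.
        global_weights = tuple(
            sum(params[i] * n for params, n in updates) / total for i in range(2)
        )
    return global_weights


def make_client(n, rng):
    """Hypothetical client dataset: noisy samples of y = 2x + 1."""
    xs = [rng.random() for _ in range(n)]
    return [(x, 2 * x + 1 + rng.gauss(0, 0.05)) for x in xs]


rng = random.Random(42)
clients = [make_client(n, rng) for n in (30, 50, 20)]
w, b = federated_averaging(clients)
# Deployment: the global weights (w, b) would now be shipped back to devices.
print(f"global model: w={w:.2f}, b={b:.2f}")
```

Weighting each client by its number of examples keeps small clients from counting as much as large ones; the printed weights should land close to the true values w = 2 and b = 1.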

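This approach allows organizations to train models on large, distributed datasets without collecting the raw data centrally. The model updates themselves can still leak information about the underlying data, so federated learning is often combined with secure aggregation or differential privacy.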

Federated learning offers a practical way to train machine learning models on decentralized data, reducing privacy risk and easing compliance by training locally and aggregating only the results.

The Role of Anonymization and Pseudonymization

Anonymization and pseudonymization are techniques used to protect the privacy of individuals by removing or replacing identifying information in the data.

While these techniques can be effective, it is important to understand their limitations and to implement them carefully to ensure that the data is truly anonymized or pseudonymized.

Anonymization vs. Pseudonymization

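Anonymization aims to remove or transform identifying information so that individuals can no longer reasonably be re-identified. This is harder than it sounds: removing direct identifiers such as names is often not enough, because combinations of quasi-identifiers such as ZIP code, birth date, and sex can single people out. Pseudonymization replaces direct identifiers with pseudonyms, such as random tokens or keyed hashes, allowing the data to be used for research or analysis while protecting the identity of individuals. Because whoever holds the key or lookup table can reverse the mapping, pseudonymized data is generally still treated as personal information under privacy laws.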
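A common way to pseudonymize a direct identifier is a keyed hash such as HMAC-SHA256. Unlike a plain hash, it cannot be reversed by an attacker who lacks the key simply hashing a list of candidate email addresses and matching the results. The key handling, the normalization rule, and the record fields below are hypothetical.

```python
import hashlib
import hmac
import secrets

# Hypothetical key: in practice it would be kept in a key-management system,
# separate from the pseudonymized data, so the mapping cannot be casually reversed.
PSEUDONYM_KEY = secrets.token_bytes(32)


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed-hash (HMAC-SHA256) pseudonym."""
    # Assumed normalization so "Jane@x.com" and " jane@x.com" map to the same pseudonym.
    normalized = identifier.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical record: only the direct identifier (email) is replaced.
record = {"email": "Jane.Doe@example.com", "zip": "94110", "purchases": 7}
pseudonymized = {**record, "email": pseudonymize(record["email"])}
print(pseudonymized)
```

Because the same input always yields the same pseudonym, records about one person can still be linked, and anyone holding the key can re-identify them by recomputing the hash. The `zip` field is left untouched, which is exactly the kind of quasi-identifier that can enable re-identification.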

- Anonymization: Aims to make re-identification infeasible, which usually requires more than deleting direct identifiers.

- Pseudonymization: Replaces identifying information with pseudonyms, allowing data to be used while protecting identity; anyone holding the key or mapping can still re-identify individuals.

- Limitations: Both techniques have limitations and require careful implementation to be effective.
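One simple way to probe these limitations is k-anonymity, a measure brought in here for illustration: a dataset is k-anonymous if every combination of quasi-identifier values (attributes such as ZIP code and age that are not unique on their own but can be combined to single someone out) is shared by at least k records. The function and sample rows are hypothetical. k-anonymity alone does not guarantee privacy; for instance, if every record in a group has the same diagnosis, that diagnosis is revealed anyway.

```python
from collections import Counter


def smallest_group(rows, quasi_identifiers):
    """Return k: the size of the smallest group sharing identical quasi-identifier values."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values(), default=0)  # 0 for an empty dataset


# Hypothetical rows with ZIP codes and ages already generalized.
rows = [
    {"zip": "941**", "age": "30-39", "diagnosis": "flu"},
    {"zip": "941**", "age": "30-39", "diagnosis": "asthma"},
    {"zip": "941**", "age": "40-49", "diagnosis": "flu"},
    {"zip": "100**", "age": "30-39", "diagnosis": "diabetes"},
    {"zip": "100**", "age": "30-39", "diagnosis": "flu"},
]
k = smallest_group(rows, ["zip", "age"])
print(f"dataset is {k}-anonymous")  # prints "dataset is 1-anonymous"
```

Here the single record in the 941**/40-49 group can be singled out, so further generalization (for example, wider age brackets) or suppression of that record would be needed before release.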

Anonymization and pseudonymization play a crucial role in protecting individuals’ privacy by altering the data, but they require diligent implementation to be effective.

Building a Culture of Data Privacy within Organizations

Compliance with data privacy regulations requires more than just implementing technical solutions. It requires building a culture of data privacy within the organization.

This includes training employees on data privacy principles, implementing robust data governance policies, and regularly auditing data practices to ensure compliance.

Key Steps to Building a Data Privacy Culture

Building a data privacy culture involves several key steps, including training employees, implementing policies, and conducting audits.

- Training: Provide regular training to employees on data privacy principles and best practices.

- Policies: Implement robust data governance policies that outline how data is collected, processed, and used.

- Audits: Conduct regular audits of data practices to ensure compliance with data privacy regulations.

- Leadership: Foster a data privacy-conscious environment with strong leadership.

Creating a culture of data privacy requires ongoing effort and commitment from all levels of the organization.

Overall, building a culture of data privacy within an organization involves comprehensive training, robust policies, regular audits, and strong leadership, ensuring sustainable compliance and ethical data handling.

| Key Concept | Brief Description |
|---|---|
| 🛡️ Data Minimization | Collect only necessary data for specific purposes. |
| 🔒 Differential Privacy | Add noise to data to protect individual privacy. |
| 🌐 Federated Learning | Train models on distributed devices without sharing raw data. |
| 🥷 Anonymization | Remove identifying information from data. |

Frequently Asked Questions

What is the CCPA, and how does it affect machine learning?

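The California Consumer Privacy Act (CCPA) grants consumers rights over their personal data, including the rights to know, delete, and opt out of the sale of their data. It affects machine learning by requiring teams to honor these requests in their training data and, as amended by the CPRA, to limit the collection and use of personal data to what is reasonably necessary for the disclosed purpose.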

How does differential privacy protect user data?

Differential privacy adds noise to the data, ensuring that the presence or absence of any single individual does not significantly affect the outcome of the analysis, thus protecting user data.

How does federated learning enhance data privacy?

Federated learning trains models locally on individual devices. This approach enhances data privacy by avoiding the need to centralize sensitive data for training models.

What are the key steps to building a data privacy culture?

Key steps include training employees on privacy principles, implementing robust data governance policies, conducting regular audits, and fostering a privacy-conscious environment through strong leadership.

What is the difference between anonymization and pseudonymization?

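Anonymization aims to make re-identification infeasible, which usually takes more than removing direct identifiers. Pseudonymization replaces identifying information with pseudonyms, allowing use while protecting identity, but anyone holding the key or mapping can still re-identify individuals.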

Conclusion

The evolving landscape of US data privacy regulations presents significant challenges and opportunities for machine learning. By understanding and implementing privacy-preserving techniques, such as differential privacy, federated learning, and anonymization, organizations can develop machine learning models that are both accurate and compliant. Building a culture of data privacy within organizations is also essential.