Reduce ML Training Time by 30% with Transfer Learning in 2025

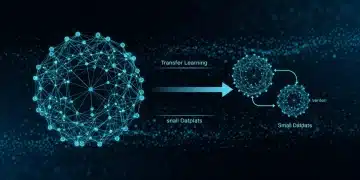

Transfer learning reuses the features learned by pre-trained models and adapts them to new, related tasks. This can significantly reduce machine learning training time, potentially by 30% by 2025, while making better use of compute resources and accelerating model deployment.

Want to cut down your machine learning model training time? By 2025, knowing how to reduce training time by 30% using transfer learning will be a critical skill. Let’s explore efficient strategies for training better models faster.

Understanding Transfer Learning for Faster Training

Transfer learning is a powerful technique that allows you to use knowledge gained from solving one problem and apply it to a different but related problem. This can significantly reduce the amount of data and computational resources required for training new machine learning models.

At its core, transfer learning focuses on leveraging pre-trained models. These models have already been trained on massive datasets and have learned valuable features that can be useful for various tasks. Let’s dive into how this approach saves time.

Benefits of Transfer Learning

There are several advantages to using transfer learning, especially regarding training time. By starting with a pre-trained model, you avoid the need to train a model from scratch. This dramatically reduces the computational load and the time required for training.

- Reduced training time: Pre-trained models provide a head start, saving significant training time.

- Lower data requirements: Less data is needed to fine-tune a pre-trained model compared to training from scratch.

- Improved model performance: Transfer learning can often lead to better model performance, especially when data is limited.

Moreover, transfer learning can be tuned for specific hardware configurations, further reducing training time. Model compression techniques such as pruning and quantization can also be applied to pre-trained models. These mainly shrink the model and speed up inference, though a smaller model can also be cheaper to fine-tune on new datasets.

In summary, transfer learning offers a compelling way to expedite machine learning model training, reduce data dependency, and improve overall model performance by leveraging existing knowledge.
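To make a figure like "30% faster" concrete, here is a small worked example of the arithmetic. The function name and the hour values are hypothetical placeholders chosen for illustration, not measurements from a real training run.

```python
def percent_reduction(baseline_hours, transfer_hours):
    """Relative training-time reduction, in percent, versus the baseline."""
    if baseline_hours <= 0:
        raise ValueError("baseline_hours must be positive")
    return 100.0 * (baseline_hours - transfer_hours) / baseline_hours

# Hypothetical numbers: 10 hours from scratch, 7 hours when fine-tuning.
print(percent_reduction(10, 7))  # 30.0
```

Measuring both runs on the same hardware and to the same target accuracy keeps the comparison fair.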

Key Steps in Applying Transfer Learning

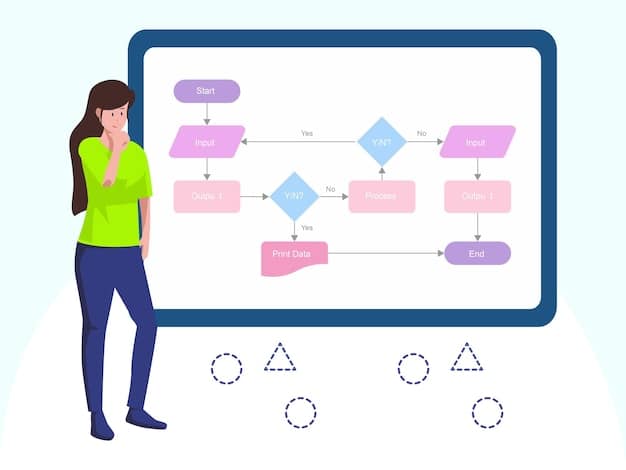

Now that we understand the benefits, let’s explore the key steps involved in applying transfer learning to reduce machine learning model training time. A structured approach is crucial for successful implementation.

The transfer learning process generally involves these steps: selecting a pre-trained model, adapting it to your specific task, and fine-tuning it with your data. Each of these steps can be optimized for the best results.

Model Selection

Choosing the right pre-trained model is crucial. Consider the similarity between the task the pre-trained model was trained on and your target task. Models trained on broad datasets like ImageNet are often a good starting point for image-related tasks.

Adaptation and Fine-Tuning

Once you’ve selected a model, you’ll need to adapt it to your specific task. This often involves replacing the final classification layer with a new one that matches the number of classes in your target dataset. Then, you fine-tune the model using your data.

Fine-tuning involves training the pre-trained model on your data. You can choose to fine-tune all layers of the model or freeze some of the earlier layers to preserve the pre-trained knowledge. This allows you to leverage the learned features while adapting the model to your specific task.

- Freeze layers: Retain pre-trained knowledge by freezing initial layers.

- Adjust learning rates: Use lower learning rates for fine-tuning so that updates do not overwrite the pre-trained features, which also helps limit overfitting on small datasets.

- Data augmentation: Increase the diversity of your data through augmentations.

By carefully selecting, adapting, and fine-tuning pre-trained models, you can significantly reduce the time and resources required to train high-performing machine learning models.
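The freeze-and-fine-tune idea can be sketched without any framework. In this toy illustration, a fixed layer stands in for the pre-trained model and only a new classification head is trained. The weights in `FROZEN_WEIGHTS`, the helper names, and the four-example dataset are all assumptions made up for the sketch. In practice you would load a real pre-trained network through your framework of choice.

```python
import math

# Hypothetical "pre-trained" layer. Its weights are frozen: never updated below.
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]

def extract_features(x):
    """Frozen layer: linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(data, lr=0.1, epochs=200):
    """Fit only the new head (logistic regression) on top of the frozen features."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            f = extract_features(x)
            error = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) - y
            # Gradient step on the head parameters only.
            w = [wi - lr * error * fi for wi, fi in zip(w, f)]
            b -= lr * error
    return w, b

def predict(x, w, b):
    f = extract_features(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0

# Toy target task: the label is 1 when the first input exceeds the second.
data = [([2.0, 0.0], 1), ([3.0, 1.0], 1), ([0.0, 2.0], 0), ([1.0, 3.0], 0)]
w, b = train_head(data)
print([predict(x, w, b) for x, _ in data])
```

Because the frozen layer is never updated, each training step only touches the three head parameters, which is where the time savings come from in larger models.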

Optimizing Data Utilization in Transfer Learning

One of the key advantages of transfer learning is the reduced need for extensive datasets. Proper data utilization techniques can further decrease training time and improve model accuracy.

Effective data utilization strategies in transfer learning revolve around minimizing the amount of data needed while maximizing the information extracted from it. These strategies often involve data augmentation, smart sampling, and active learning techniques.

Data Augmentation Techniques

Data augmentation involves creating new training examples by applying transformations to existing data. These transformations can include rotations, flips, crops, and color adjustments. Data augmentation can significantly increase the size and diversity of your training dataset, leading to improved model performance.
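As a minimal sketch of two of these transformations, the code below flips and rotates an image stored as a nested list of pixel values. The function names are illustrative. Real pipelines usually rely on the augmentation utilities that ship with an image or deep learning library.

```python
def horizontal_flip(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]

def rotate_90_clockwise(image):
    """Rotate the image a quarter turn clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def augment(image):
    """Return the original image plus two transformed copies."""
    return [image, horizontal_flip(image), rotate_90_clockwise(image)]

tiny_image = [[1, 2],
              [3, 4]]
for variant in augment(tiny_image):
    print(variant)
# [[1, 2], [3, 4]]
# [[2, 1], [4, 3]]
# [[3, 1], [4, 2]]
```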

Smart Sampling Methods

Smart sampling methods focus on selecting the most informative examples from your dataset for training. This can be particularly useful when working with large datasets where it is not feasible to train on the entire dataset. Techniques like active learning can help identify the examples that will have the greatest impact on model performance.
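One common way to pick informative examples is uncertainty sampling: choose the items the current model is least sure about. The sketch below assumes a binary classifier whose predicted probabilities are already available. The function name and the probability values are hypothetical.

```python
def select_most_uncertain(probabilities, k):
    """Return indices of the k predictions closest to 0.5 (most uncertain)."""
    ranked = sorted(range(len(probabilities)),
                    key=lambda i: abs(probabilities[i] - 0.5))
    return ranked[:k]

# Hypothetical model outputs for five unlabeled examples.
probs = [0.95, 0.52, 0.10, 0.45, 0.80]
print(select_most_uncertain(probs, k=2))  # [1, 3]
```

The selected examples would then be labeled and added to the fine-tuning set before the next training round.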

Furthermore, techniques like synthetic data generation can supplement real-world data to overcome biases or scarcity, ensuring more robust and generalizable models. Balancing the dataset with synthetic samples can improve the model’s ability to handle under-represented classes.

- Active learning: Choose the most informative data points for training.

- Synthetic data: Generate artificial data to supplement real-world data.

- Balanced sampling: Ensure equal representation of all classes in the training data.

By optimizing data utilization through these strategies, you can achieve significant reductions in training time and improvements in model performance. This is particularly beneficial for tasks where data is limited or expensive to acquire.
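Balanced sampling can be sketched as downsampling every class to the size of the rarest one. This is only one of several possible balancing strategies, and the function name and toy dataset are assumptions for illustration.

```python
import random
from collections import Counter, defaultdict

def balanced_sample(examples, seed=0):
    """Downsample each class to the size of the smallest class.

    `examples` is a list of (item, label) pairs.
    """
    by_label = defaultdict(list)
    for item, label in examples:
        by_label[label].append(item)
    target = min(len(items) for items in by_label.values())
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    sample = []
    for label, items in by_label.items():
        sample.extend((item, label) for item in rng.sample(items, target))
    return sample

# Hypothetical imbalanced dataset: six "cat" examples, two "dog" examples.
dataset = [(f"img{i}", "cat") for i in range(6)] + [("img6", "dog"), ("img7", "dog")]
balanced = balanced_sample(dataset)
print(Counter(label for _, label in balanced))
```

Downsampling discards data, so when examples are scarce, oversampling the minority class or adding synthetic samples may be the better trade-off.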

Advanced Techniques for Accelerating Transfer Learning

Beyond the basic steps of transfer learning, several advanced techniques can further accelerate training. They range from model compression methods to more sophisticated optimization algorithms, and they operate at both the model level and the training level. Used well, they can speed up training significantly while maintaining model accuracy.

Model Compression Techniques

Model compression techniques aim to reduce the size and complexity of machine learning models while losing as little accuracy as possible. Pruning and quantization make models smaller, which reduces memory use and can shorten fine-tuning and inference time.
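To show what these two techniques do to the numbers, here is a framework-free sketch of magnitude pruning and symmetric 8-bit quantization on a flat list of weights. The function names and weight values are hypothetical. Production toolkits apply the same ideas per layer and with more careful calibration.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]

weights = [0.8, -0.05, 0.3, -0.9, 0.01, 0.2]
print(magnitude_prune(weights, sparsity=0.5))  # [0.8, 0.0, 0.3, -0.9, 0.0, 0.0]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2 + 1e-12)  # True
```

The second check illustrates that the rounding error per weight is at most half a quantization step.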

Optimization Algorithms

The choice of optimization algorithm can also have a significant impact on training time. Adaptive optimizers like Adam and RMSprop scale the step size for each parameter and often converge in fewer iterations than plain stochastic gradient descent.
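For readers who want to see what an adaptive optimizer does, the sketch below implements the standard Adam update rule for a single parameter and uses it to minimize a simple quadratic. The function name, the test objective, and the hyperparameter values are illustrative assumptions. In a real project you would use the optimizer provided by your framework.

```python
import math

def adam_minimize(grad, x, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a one-dimensional function given its gradient, using Adam."""
    m, v = 0.0, 0.0  # running averages of the gradient and squared gradient
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the early steps
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

# Toy objective f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
x_min = adam_minimize(lambda x: 2 * (x - 3), x=0.0)
print(x_min)
```

The result should land close to 3, the minimizer of the toy objective. Dividing by the running root-mean-square of the gradient is what makes the step size adapt per parameter.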

Additionally, leveraging distributed training frameworks and hardware accelerators can provide dramatic speedups. Frameworks like TensorFlow and PyTorch are designed to efficiently utilize GPUs and TPUs, enabling faster training times for large models.

- Pruning: Remove unnecessary connections in the model.

- Quantization: Reduce the precision of weights and activations.

- Distributed training: Train models on multiple GPUs or TPUs.

By leveraging these advanced techniques, you can significantly reduce the training time of your machine learning models while maintaining or even improving their performance. These methods are especially valuable for large and complex models.
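The core idea behind data-parallel distributed training can be simulated in a few lines: each worker computes a gradient on its own shard of the batch, and the results are averaged. The sketch below uses a one-parameter linear model and two simulated workers. All names and data are hypothetical, and real frameworks perform the averaging step across devices for you.

```python
def grad_mse(w, shard):
    """Gradient of the mean squared error for the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def data_parallel_grad(w, shards):
    """Average the local gradients, as an all-reduce step would."""
    local_grads = [grad_mse(w, shard) for shard in shards]
    return sum(local_grads) / len(local_grads)

# Toy data following y = 2x, split evenly across two simulated workers.
data = [(1, 2), (2, 4), (3, 6), (4, 8)]
shards = [data[:2], data[2:]]

print(grad_mse(1.0, data))              # -15.0
print(data_parallel_grad(1.0, shards))  # -15.0
```

With equally sized shards, the averaged gradient matches the full-batch gradient, so the workers can split the computation without changing the update.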

Hardware and Software Considerations for Transfer Learning in 2025

In 2025, hardware and software advancements will continue to play a crucial role in optimizing transfer learning, and they keep opening new opportunities to improve training efficiency. Staying up to date with the latest technologies and tools can help you get the best results.

Hardware Acceleration

Hardware accelerators like GPUs and TPUs will become even more powerful and accessible in 2025. These accelerators can significantly speed up the training process, especially for deep learning models. Cloud platforms like AWS, Google Cloud, and Azure will offer a wide range of hardware options for transfer learning.

Software Frameworks

Software frameworks like TensorFlow, PyTorch, and JAX will continue to evolve, offering new features and optimizations for transfer learning. These frameworks provide high-level APIs and tools for building, training, and deploying machine learning models. They also support distributed training, allowing you to train models on multiple devices.

Moreover, specialized software tools and libraries will emerge to streamline the transfer learning workflow, including automated hyperparameter tuning services and model management platforms. These tools will enable data scientists and engineers to focus on model design and data preparation rather than infrastructure management.

- Cloud platforms: Utilize cloud-based hardware for accelerated training.

- Framework updates: Stay updated with the latest software improvements.

- Specialized tools: Employ automated hyperparameter tuning services.

By considering these hardware and software advancements, you can optimize your transfer learning workflow and achieve the best possible performance in 2025. Proper utilization of these resources is key to maximizing efficiency.

Predicting the Future of Transfer Learning by 2025

Looking ahead to 2025, transfer learning will likely become even more integral to machine learning workflows, driven by advancements in algorithms, hardware, and data availability. Staying informed about these trends is crucial for staying ahead in the field.

Automated Transfer Learning (AutoML)

Automated Machine Learning (AutoML) will play a key role in simplifying and accelerating transfer learning. AutoML tools can automatically select the best pre-trained models, optimize hyperparameters, and fine-tune models with minimal human intervention.

Self-Supervised Learning

Self-supervised learning techniques will enable models to learn from unlabeled data, further reducing the need for labeled datasets. This can significantly expand the applicability of transfer learning to new domains and tasks.

Furthermore, we can anticipate the development of more robust and explainable transfer learning methods, enabling better understanding and control over the transferred knowledge. This will be essential for deploying reliable and trustworthy AI systems.

- Explainable AI: Develop methods to understand and interpret transferred knowledge.

- Cross-domain transfer: Enable models to transfer knowledge across diverse domains.

- Lifelong learning: Create models that continuously learn and adapt over time.

By anticipating these future trends, you can position yourself to leverage the full potential of transfer learning and drive innovation in machine learning. Adaptability and a forward-thinking mindset will be essential.

| Key Point | Brief Description |
|---|---|
| 🚀 Faster Training | Leverages pre-trained models to reduce training time. |
| 📊 Less Data Needed | Fine-tuning requires less data than training from scratch. |
| 🧠 Better Performance | Improves model accuracy, especially with limited data. |
| ⚙️ Hardware Boost | GPUs/TPUs accelerate transfer learning in 2025. |

FAQ

Transfer learning is a machine learning technique where a model trained on one task is reused as the starting point for a model on a second task. It’s particularly useful when the second task has limited data.

Transfer learning can significantly reduce training time, potentially by 30% or more, because models don’t need to learn from scratch. Pre-trained features accelerate convergence on new datasets.

Choose a pre-trained model relevant to your task. Models trained on large, diverse datasets like ImageNet are often good starting points for image-related tasks due to their generic feature extraction capabilities.

Transfer learning can improve model performance, especially when you have limited data. Pre-trained models provide valuable initial knowledge, leading to better generalization and performance compared to training a model from scratch on a small dataset.

Challenges of transfer learning include negative transfer (where pre-trained knowledge hurts performance), selecting the right pre-trained model, and tuning fine-tuning hyperparameters to balance pre-trained and new knowledge.

Conclusion

Transfer learning offers a powerful pathway to expedite machine learning model training, reduce data requirements, and improve overall performance. By 2025, mastering these techniques will be essential for anyone looking to stay competitive in the field.