Machine Learning Model Deployment: US Cloud Platform Comparison

This article compares the major cloud platforms available in the US for deploying machine learning models, highlighting their strengths, weaknesses, and ideal use cases.

Efficient deployment of machine learning models is crucial for businesses looking to leverage AI. The sections below compare deployment strategies across the leading US cloud platforms to help you choose the right one.

Understanding Machine Learning Model Deployment

Machine learning model deployment is the process of integrating a trained machine learning model into an existing production environment. This allows businesses to use the model to make predictions and automate tasks.

Effective deployment strategies are essential for ensuring that models perform optimally and deliver the expected business value.
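To make the idea of moving a trained model into production concrete, here is a minimal sketch. It assumes a tiny linear model whose parameters are stored as a JSON artifact; the function names, file name, and parameter values are all hypothetical and not tied to any platform.

```python
import json
import os
import tempfile


def save_model(weights, bias, path):
    """Write the trained parameters to a JSON artifact."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump({"weights": weights, "bias": bias}, f)


def load_model(path):
    """Load the artifact inside the serving process."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def predict(model, features):
    """Score one input with a linear model: w . x + b."""
    return sum(w * x for w, x in zip(model["weights"], features)) + model["bias"]


# Training side: persist the (hypothetical) learned parameters.
artifact_path = os.path.join(tempfile.mkdtemp(), "model.json")
save_model([0.5, -0.25], 1.0, artifact_path)

# Production side: load once at startup, then score incoming requests.
model = load_model(artifact_path)
score = predict(model, [2.0, 4.0])
```

Real deployments usually store the artifact in object storage or a model registry rather than a local temporary directory, but the save, load, and predict split stays the same.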

Key Stages in Model Deployment

The deployment process involves several key stages, each requiring careful planning and execution.

- Model Selection: Choosing the right model for the specific business problem.

- Environment Setup: Configuring the deployment environment, including hardware and software.

- Testing and Validation: Ensuring the model performs as expected in a production setting.

- Monitoring and Maintenance: Continuously monitoring the model’s performance and making necessary adjustments.

Different cloud platforms offer various tools and services to streamline these stages, making the deployment process more efficient.
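The testing and validation stage can be sketched as a simple gate that a model must pass before release. This is an illustrative example only: the `validate` helper, the toy classifier, the holdout data, and the 0.7 accuracy threshold are all assumptions.

```python
def validate(predict_fn, examples, min_accuracy):
    """Return (passed, accuracy) for a labelled holdout set."""
    correct = sum(1 for features, label in examples if predict_fn(features) == label)
    accuracy = correct / len(examples)
    return accuracy >= min_accuracy, accuracy


def threshold_model(features):
    # Hypothetical classifier: predicts 1 when the feature sum exceeds 1.0.
    return 1 if sum(features) > 1.0 else 0


# Hypothetical holdout examples as (features, expected_label) pairs.
holdout = [
    ([0.2, 0.1], 0),
    ([0.9, 0.8], 1),
    ([0.6, 0.7], 1),
    ([0.5, 0.4], 1),  # the toy model gets this one wrong
]

passed, accuracy = validate(threshold_model, holdout, min_accuracy=0.7)
```

Here the model scores three of four examples correctly, so it clears the 0.7 threshold; raising the threshold to 0.8 would block the release.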

Overview of Leading Cloud Platforms for Machine Learning

The US market offers a variety of cloud platforms that cater to machine learning model deployment. Each platform has its unique strengths and weaknesses.

Understanding these platforms can help businesses make informed decisions about which one best suits their needs.

Amazon Web Services (AWS)

AWS provides a comprehensive suite of services for machine learning, including SageMaker, which simplifies the deployment process.

SageMaker offers tools for building, training, and deploying machine learning models with ease.

Google Cloud Platform (GCP)

GCP offers Vertex AI (formerly AI Platform), a managed service that allows users to deploy and manage machine learning models.

Vertex AI provides tools for model training, prediction, and deployment, making it a versatile option.

Microsoft Azure

Azure Machine Learning provides a collaborative, cloud-based environment for data scientists to build, train, and deploy models.

Azure offers integration with other Azure services, providing a cohesive ecosystem for machine learning workflows.

Each platform offers different pricing models, compute resources, and integration capabilities. Businesses should evaluate these factors to determine the best fit for their specific requirements.

Comparing AWS, GCP, and Azure for Model Deployment

When comparing AWS, GCP, and Azure for machine learning model deployment, several factors come into play. These include ease of use, scalability, pricing, and integration capabilities.

Understanding these differences can help businesses make a more informed decision.

Ease of Use

AWS SageMaker offers a user-friendly interface and a wide range of pre-built algorithms, making it easier for beginners.

GCP’s Vertex AI (formerly AI Platform) is known for its flexibility and support for custom models, which may require more expertise.

Azure Machine Learning provides a balanced approach, with both user-friendly tools and advanced customization options.

Scalability

All three platforms offer excellent scalability, allowing businesses to handle large volumes of data and traffic.

AWS’s auto-scaling capabilities are highly regarded, providing seamless scaling as needed.

GCP’s Google Kubernetes Engine (GKE) offers robust scalability and container management.

Azure’s scalability is tightly integrated with other Azure services, providing a cohesive scaling solution.
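As a rough illustration of how metric-driven auto-scaling decides on capacity, the sketch below applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: scale the replica count by the ratio of the observed metric to its target. The function name and the minimum and maximum bounds are assumptions, and the managed autoscalers on AWS and Azure apply their own policies.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling: replicas grow with the metric-to-target ratio."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (hypothetical defaults).
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% CPU with a 60% target scale out to 6.
scale_out = desired_replicas(4, 90, 60)

# 4 replicas at 30% CPU with a 60% target scale in to 2.
scale_in = desired_replicas(4, 30, 60)
```

The clamp matters in practice: without an upper bound, a traffic spike could push the replica count (and the bill) far beyond what was budgeted.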

Cost Considerations for Cloud-Based Deployment

Cost is a critical factor when choosing a cloud platform for machine learning model deployment. Each platform has its own pricing model.

Understanding these costs is crucial for budgeting and optimizing expenses.

Pricing Models

AWS offers a pay-as-you-go pricing model, where users only pay for the resources they use.

GCP also uses a pay-as-you-go model, with sustained use discounts applied automatically to eligible resources that run for a large part of the billing month.

Azure offers a combination of pay-as-you-go and reserved instance pricing, allowing users to optimize costs based on their usage patterns.
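One way to weigh reserved pricing against pay-as-you-go is to find the break-even number of hours per month. The sketch below uses made-up rates purely for illustration; actual prices differ by provider, region, and instance type.

```python
def break_even_hours(on_demand_hourly, reserved_monthly):
    """Hours per month above which the reserved commitment is cheaper."""
    return reserved_monthly / on_demand_hourly


def cheaper_option(on_demand_hourly, reserved_monthly, hours_used):
    """Compare the two totals for a given monthly usage."""
    on_demand_total = on_demand_hourly * hours_used
    return "reserved" if reserved_monthly < on_demand_total else "pay-as-you-go"


# Hypothetical rates: $0.50 per hour on demand vs. $200 per month reserved.
threshold = break_even_hours(0.5, 200.0)          # 400.0 hours
light_usage = cheaper_option(0.5, 200.0, 300)     # "pay-as-you-go"
always_on = cheaper_option(0.5, 200.0, 730)       # "reserved"
```

An endpoint that serves traffic around the clock (about 730 hours a month) sits well above the break-even point, while a batch job that runs a few hours a day does not.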

Factors Influencing Costs

Several factors can influence the cost of cloud-based deployment, including compute resources, storage, and network bandwidth.

- Compute Resources: The type and number of virtual machines or containers used for deployment.

- Storage: The amount of storage required for training data and model artifacts.

- Network Bandwidth: The amount of data transferred between the cloud platform and external sources.

Businesses should carefully evaluate these factors to estimate their deployment costs accurately.
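The three cost factors above can be combined into a back-of-the-envelope monthly estimate. The `Rates` fields and the numbers used here are hypothetical placeholders, not published prices from any provider.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    compute_per_hour: float      # per instance-hour
    storage_per_gb_month: float  # per GB stored per month
    egress_per_gb: float         # per GB transferred out


def estimate_monthly_cost(rates, instances, hours_per_instance, storage_gb, egress_gb):
    """Break the estimate down by compute, storage, and network."""
    compute = rates.compute_per_hour * instances * hours_per_instance
    storage = rates.storage_per_gb_month * storage_gb
    network = rates.egress_per_gb * egress_gb
    return {
        "compute": compute,
        "storage": storage,
        "network": network,
        "total": compute + storage + network,
    }


# Hypothetical scenario: 2 instances running 720 hours each,
# 500 GB of stored data and artifacts, 100 GB of outbound traffic.
rates = Rates(compute_per_hour=0.25, storage_per_gb_month=0.02, egress_per_gb=0.10)
estimate = estimate_monthly_cost(rates, instances=2, hours_per_instance=720,
                                 storage_gb=500, egress_gb=100)
```

In this scenario compute dominates the bill, which is typical for always-on endpoints and suggests where optimization effort pays off first.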

Best Practices for Efficient Model Deployment

Efficient model deployment requires careful planning and adherence to best practices. These practices can help businesses optimize performance, reduce costs, and ensure reliability.

Implementing these strategies can significantly improve the overall deployment process.

Containerization

Containerization, using technologies like Docker, allows for consistent and reproducible deployments across different environments.

Containers encapsulate the model and its dependencies, ensuring that it runs reliably regardless of the underlying infrastructure.
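A container image typically wraps a small serving process like the one sketched below, together with its dependencies. This example uses only the Python standard library; the placeholder `predict` function, the port, and the request format are assumptions, and a real image would start it by calling `serve()` from its entry point.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    # Placeholder model: returns the mean of the input features.
    return sum(features) / len(features) if features else 0.0


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the JSON request body, e.g. {"features": [1.0, 2.0, 3.0]}.
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps({"prediction": predict(payload.get("features", []))})
        encoded = body.encode("utf-8")

        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(encoded)))
        self.end_headers()
        self.wfile.write(encoded)


def serve(port=8080):
    """Blocking call; intended as the container's entry point."""
    HTTPServer(("0.0.0.0", port), PredictHandler).serve_forever()
```

Because the process listens on a fixed port and carries no host-specific configuration, the same image can run unchanged on a laptop, a test cluster, or any of the cloud platforms discussed here.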

Continuous Integration and Continuous Deployment (CI/CD)

Implementing CI/CD pipelines automates the deployment process, reducing the risk of errors and improving efficiency.

CI/CD pipelines enable rapid iteration and deployment of new model versions.
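At its core, a CI/CD pipeline runs a series of checks in order and deploys only if every one passes. The sketch below models that behavior in plain Python; the step names and accuracy figures are hypothetical, and a real pipeline would be defined in a CI system's own configuration format.

```python
def run_pipeline(steps):
    """Run (name, callable) steps in order and stop at the first failure."""
    completed = []
    for name, step in steps:
        if not step():
            return {"status": "failed", "failed_step": name, "completed": completed}
        completed.append(name)
    return {"status": "deployed", "failed_step": None, "completed": completed}


# Hypothetical evaluation results for the new and current model versions.
candidate_accuracy = 0.91
production_accuracy = 0.88

steps = [
    ("unit_tests", lambda: True),
    ("beats_production", lambda: candidate_accuracy >= production_accuracy),
    ("deploy", lambda: True),
]

result = run_pipeline(steps)
```

Placing the model comparison before the deploy step means a weaker candidate never reaches production, even if its code passes every unit test.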

Monitoring and Alerting

Continuous monitoring of model performance is essential for identifying and addressing potential issues.

Setting up alerts for key metrics allows for proactive intervention and ensures that the model continues to perform optimally.
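A threshold alert over a rolling window is one simple way to implement this. The sketch below tracks the error rate of the most recent predictions; the class name, window size, and threshold are illustrative assumptions, and each cloud platform offers its own managed monitoring service for the same purpose.

```python
from collections import deque


class RollingAlert:
    """Alert when the error rate over the last `window` outcomes exceeds `threshold`."""

    def __init__(self, window, threshold):
        self.outcomes = deque(maxlen=window)
        self.threshold = threshold

    def record(self, is_error):
        """Record one prediction outcome and report whether to alert."""
        self.outcomes.append(1 if is_error else 0)
        return self.should_alert()

    def error_rate(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def should_alert(self):
        # Wait for a full window so a single early error does not trigger an alert.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.error_rate() > self.threshold


monitor = RollingAlert(window=4, threshold=0.25)
alerts = [monitor.record(outcome) for outcome in [False, False, True, False, True]]
```

The alert fires only on the fifth outcome, once two of the last four predictions were errors and the rate rose above the 25% threshold.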

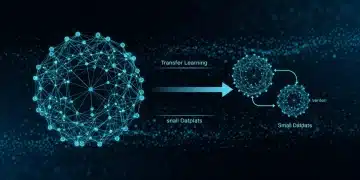

Future Trends in Machine Learning Model Deployment

The field of machine learning model deployment is constantly evolving, with new trends and technologies emerging regularly. Staying abreast of these trends can help businesses stay competitive.

Understanding these future developments can provide a strategic advantage.

Edge Computing

Edge computing involves deploying models closer to the data source, reducing latency and improving performance.

Edge deployment is particularly useful for applications that require real-time decision-making, such as autonomous vehicles and IoT devices.

Serverless Deployment

Serverless deployment allows businesses to deploy models without managing servers, reducing operational overhead.

Serverless platforms like AWS Lambda and Azure Functions provide a scalable and cost-effective way to deploy machine learning models.
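As an illustration, a Python function on AWS Lambda is an ordinary function that receives an `event` and a `context`. The sketch below assumes the event carries a JSON string in its `body` field, as it does when the function sits behind an HTTP proxy integration; the weights and the `predict` helper are hypothetical placeholders. Azure Functions uses a different handler signature.

```python
import json

# Placeholder parameters; a real function would load a model artifact
# once, outside the handler, so warm invocations can reuse it.
WEIGHTS = [2.0, 1.0]


def predict(features):
    return sum(w * x for w, x in zip(WEIGHTS, features))


def lambda_handler(event, context):
    """Parse the request, score it, and return an HTTP-style response."""
    try:
        payload = json.loads(event.get("body") or "{}")
        features = payload["features"]
    except (ValueError, KeyError, TypeError):
        error = {"error": "expected a JSON body with a 'features' list"}
        return {"statusCode": 400, "body": json.dumps(error)}

    return {"statusCode": 200, "body": json.dumps({"prediction": predict(features)})}


# Local invocation with a hand-built event; no AWS account is needed.
response = lambda_handler({"body": json.dumps({"features": [1.0, 3.0]})}, None)
```

Because the handler is a plain function, it can be unit tested locally like this before it is ever uploaded.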

Explainable AI (XAI)

Explainable AI focuses on making machine learning models more transparent and interpretable.

XAI is becoming increasingly important as businesses need to understand why models are making certain predictions, especially in regulated industries.
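For a linear model, an explanation can be read directly from the parameters: each feature contributes its weight times its value. The sketch below ranks features by the size of that contribution. The feature names, weights, and input are hypothetical, and more complex models need dedicated attribution methods.

```python
def explain_linear(weights, bias, features, names):
    """Return the prediction and per-feature contributions, largest first."""
    contributions = {name: w * x for name, w, x in zip(names, weights, features)}
    prediction = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return prediction, ranked


# Hypothetical credit-style example.
names = ["income", "debt", "age"]
weights = [0.5, -2.0, 0.25]
applicant = [4.0, 1.5, 2.0]

prediction, ranked = explain_linear(weights, 1.0, applicant, names)
# ranked lists "debt" first: its contribution of -3.0 has the largest magnitude.
```

An output like this lets a reviewer see that debt pulled the score down more than income pushed it up, which is the kind of reasoning regulators often ask for.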

| Key Point | Brief Description |
|---|---|
| 🚀 Cloud Platforms | AWS, GCP, and Azure are popular choices for deploying machine learning models, each with unique features. |
| 💰 Cost Considerations | Pricing models vary among platforms, impacting overall deployment expenses. |
| ⚙️ Best Practices | Containerization, CI/CD, and monitoring are crucial for efficient deployment and optimal performance. |
| 🔮 Future Trends | Edge computing, serverless deployment, and explainable AI are shaping the future of model deployment. |

Frequently Asked Questions

What is machine learning model deployment?

Machine learning model deployment is the process of integrating a trained machine learning model into a production environment, allowing it to make predictions on real-world data.

Which cloud platform is best for deploying machine learning models?

The best platform depends on specific needs. AWS offers a comprehensive suite, GCP provides flexibility, and Azure integrates well with other Microsoft services.

How can businesses reduce cloud deployment costs?

Optimize compute resources, use reserved instances, leverage spot instances, and monitor usage to minimize expenses and improve resource allocation efficiency.

Why use containerization for model deployment?

Containerization ensures consistent deployments across environments by encapsulating the model and its dependencies. It also improves portability and scalability.

What is explainable AI, and why does it matter?

Explainable AI makes machine learning models more transparent and interpretable, allowing users to understand why models make specific predictions. It’s crucial for trust and compliance.

Conclusion

Choosing the right cloud platform and deployment strategy can significantly impact the success of AI initiatives. By carefully evaluating the features, costs, and best practices associated with each platform, businesses can ensure efficient, scalable, and cost-effective deployment of their machine learning models, ultimately driving greater value and innovation.