Ethical AI in Business: A US Guide to Responsible Adoption

Adopting AI responsibly in US business means addressing bias, ensuring transparency, and complying with applicable regulations in order to build trust and reduce the risk of harm.

The integration of Artificial Intelligence (AI) into business operations is no longer a futuristic concept but a current reality. As US companies increasingly adopt AI technologies, understanding the ethical implications of that adoption becomes paramount. This guide provides an overview of the ethical considerations businesses must address to implement AI responsibly.

Understanding the Rise of AI in US Business

The United States is at the forefront of AI adoption, with businesses across various sectors recognizing its potential to drive innovation, increase efficiency, and improve decision-making. From automating routine tasks to providing sophisticated data analytics, AI is transforming how businesses operate.

This rapid adoption, however, brings several ethical challenges to the forefront. As AI systems become more complex and autonomous, it’s crucial to ensure they align with societal values and ethical principles. This includes addressing potential biases in algorithms, ensuring transparency in AI decision-making, and maintaining accountability for AI outcomes.

Key Drivers of AI Adoption in the US

- Increased Efficiency: AI automates tasks, reducing operational costs and increasing productivity.

- Data-Driven Insights: AI provides powerful analytics, enabling better-informed business decisions.

- Enhanced Customer Experience: AI-powered chatbots and personalized services improve customer satisfaction.

The shift also presents an opportunity to reshape the American workforce. While some jobs may be automated, AI also creates new roles in areas like data science, AI development, and AI ethics. Businesses have a responsibility to invest in workforce training and upskilling to prepare their employees for these changes.

In conclusion, the rise of AI in US business is creating significant opportunities and challenges. Understanding the ethical implications of AI is crucial for businesses seeking to harness its power responsibly and sustainably.

Identifying Ethical Challenges in AI Implementation

Implementing AI in business isn’t without its challenges, especially when it comes to ethics. AI systems can inadvertently perpetuate biases, compromise privacy, and make decisions that lack transparency. Understanding these challenges is the first step towards adopting responsible AI practices.

One of the most significant ethical concerns is algorithmic bias. AI systems are trained on data, and if that data reflects existing societal biases, the AI can reproduce or even amplify those biases in its decision-making. This can lead to discriminatory outcomes in areas like hiring, lending, and criminal justice.

Common Ethical Pitfalls in AI

- Algorithmic Bias: AI systems amplifying existing societal biases.

- Lack of Transparency: Difficulty understanding how AI systems arrive at their decisions.

- Privacy Concerns: AI systems collecting and processing vast amounts of personal data.

Another critical concern is the so-called “black box” problem. Many AI systems, particularly deep learning models, are so complex that it’s difficult to understand how they arrive at their decisions. This lack of transparency can make it challenging to identify and correct biases or errors, and can erode trust in AI systems.

Addressing these ethical challenges requires a multi-faceted approach, including careful data curation, rigorous testing for bias, and a commitment to transparency and accountability. Businesses must also consider the broader societal implications of their AI deployments, ensuring they align with ethical principles and human values.

In summary, identifying and addressing ethical challenges in AI implementation is essential for businesses to build trust, mitigate risks, and ensure that AI benefits society as a whole.

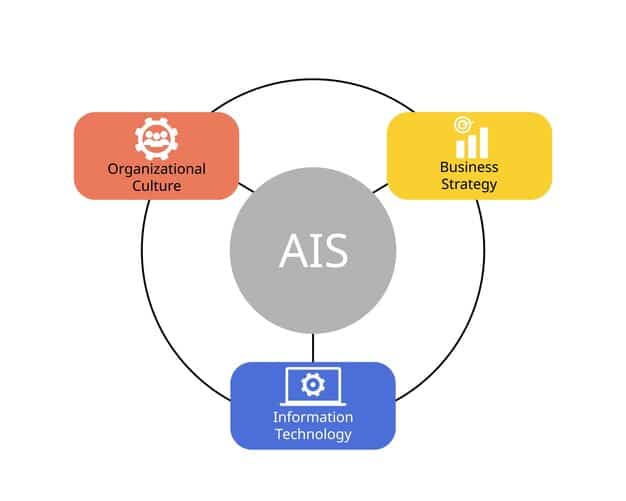

Establishing a Framework for Responsible AI Adoption

To navigate the ethical complexities of AI, businesses need to establish a robust framework for responsible AI adoption. This framework should encompass ethical guidelines, governance structures, and practical tools to ensure that AI systems are developed and deployed in a manner that is fair, transparent, and accountable.

A key component of this framework is an ethical AI policy that outlines the principles and values that guide AI development and deployment. This policy should address issues like bias, transparency, privacy, and human oversight. It should also establish clear lines of responsibility and accountability for AI outcomes.

Key Elements of a Responsible AI Framework

- Ethical AI Policy: A clear statement of ethical principles and values.

- Governance Structures: Teams responsible for overseeing AI ethics and compliance.

- Bias Detection and Mitigation: Processes for identifying and correcting bias in AI systems.

Another essential element is establishing governance structures to oversee AI ethics and compliance. This may involve creating an AI ethics committee or appointing individuals responsible for ensuring AI systems comply with ethical guidelines and regulations.

Implementing a responsible AI framework also requires practical tools and processes for detecting and mitigating bias, ensuring transparency, and protecting privacy. This may involve using explainable AI (XAI) techniques to understand how AI systems make decisions or implementing privacy-enhancing technologies to protect personal data.

In conclusion, a comprehensive framework for responsible AI adoption, one that combines ethical guidelines, governance structures, and practical tools, is critical for businesses seeking to use AI ethically and sustainably.

Ensuring Fairness and Mitigating Bias in AI Systems

Ensuring fairness and mitigating bias in AI systems is a critical ethical imperative. Algorithmic bias can lead to discriminatory outcomes, perpetuating inequalities and eroding trust in AI. Businesses must take proactive steps to identify and correct bias in their AI systems.

One of the most effective strategies for mitigating bias is careful data curation. This involves scrutinizing the data used to train AI systems, identifying potential sources of bias, and taking steps to correct or mitigate those biases. This may involve collecting more diverse and representative data, or using techniques like data augmentation to balance the dataset.
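As a rough illustration of balancing a dataset, the sketch below uses reweighting rather than the data augmentation mentioned above: each record gets a weight so that every group carries the same total weight during training. The function name `balancing_weights` and the group labels are hypothetical, and a real project would likely need a more careful approach.

```python
from collections import Counter

def balancing_weights(groups):
    """Return one weight per record so every group has equal total weight."""
    counts = Counter(groups)
    n_groups = len(counts)
    total = len(groups)
    # Each group's weights sum to total / n_groups.
    return [total / (n_groups * counts[g]) for g in groups]

# Hypothetical group labels for six training records (group B is under-represented).
groups = ["A", "A", "A", "A", "B", "B"]
weights = balancing_weights(groups)

for g, w in zip(groups, weights):
    print(g, w)
```

Records from the smaller group receive larger weights, so the two groups contribute equally overall without any rows being dropped or duplicated.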

Strategies for Mitigating Bias in AI

- Data Curation: Scrutinizing and correcting bias in training data.

- Bias Detection Tools: Using tools to identify bias in AI systems.

- Fairness Metrics: Measuring fairness using metrics like equal opportunity and demographic parity.

Bias detection tools are another valuable aid. They can analyze an AI system’s decisions and flag areas where it may be unfairly disadvantaging certain groups.
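A very small version of such a check might compare each group's rate of favorable decisions against the best-treated group and flag large gaps. The sketch below is an assumption-laden illustration, not a legal test: the function names are hypothetical, and the default threshold of 0.8 mirrors the commonly cited "four-fifths" rule of thumb from US employment selection guidance.

```python
def selection_rates(decisions, groups):
    """Share of favorable decisions (1 = favorable, 0 = unfavorable) per group."""
    totals, positives = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + decision
    return {g: positives[g] / totals[g] for g in totals}

def flag_disparate_impact(decisions, groups, threshold=0.8):
    """Return groups whose selection rate ratio to the top group is below threshold."""
    rates = selection_rates(decisions, groups)
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        return {}  # nobody received a favorable decision, so no ratio is defined
    return {g: r / best for g, r in rates.items() if r / best < threshold}

# Hypothetical decisions for eight applicants in two groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
flagged = flag_disparate_impact(decisions, groups)
print(flagged)
```

A flagged group is a prompt for human review rather than proof of discrimination; the appropriate threshold and reference group depend on the context.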

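Fairness metrics are also essential for quantifying fairness in AI systems. Demographic parity asks whether different groups receive favorable outcomes at similar rates, while equal opportunity asks whether individuals who merit a favorable outcome receive one at similar rates in each group. Metrics like these can help businesses assess whether their AI systems are producing equitable outcomes across demographic groups.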
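To make the two metrics concrete, here is a minimal sketch that computes them for two groups. The function names and the toy data are assumptions made for illustration; established fairness libraries offer more complete implementations.

```python
def rate(values):
    """Mean of a list of 0/1 values, or 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0

def demographic_parity_difference(y_pred, groups, a, b):
    """Difference in favorable-prediction rates between groups a and b."""
    rate_a = rate([p for p, g in zip(y_pred, groups) if g == a])
    rate_b = rate([p for p, g in zip(y_pred, groups) if g == b])
    return rate_a - rate_b

def equal_opportunity_difference(y_true, y_pred, groups, a, b):
    """Difference in true positive rates between groups a and b."""
    tpr_a = rate([p for t, p, g in zip(y_true, y_pred, groups) if g == a and t == 1])
    tpr_b = rate([p for t, p, g in zip(y_true, y_pred, groups) if g == b and t == 1])
    return tpr_a - tpr_b

# Hypothetical labels (1 = merits a favorable outcome) and model predictions.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

dp_gap = demographic_parity_difference(y_pred, groups, "A", "B")
eo_gap = equal_opportunity_difference(y_true, y_pred, groups, "A", "B")
print(dp_gap, eo_gap)
```

A value near zero suggests the two groups are treated similarly on that metric. The two metrics can disagree, so which one to prioritize is a judgment call for the business.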

By prioritizing fairness and actively mitigating bias, businesses can ensure their AI systems are aligned with ethical principles and contribute to a more just and equitable society.

In conclusion, ensuring fairness and mitigating bias in AI systems is not just an ethical imperative, but also a business imperative. By proactively addressing bias, businesses can build trust, mitigate risks, and ensure that AI benefits all members of society.

Promoting Transparency and Explainability in AI

Transparency and explainability are essential for building trust in AI systems. When AI systems make decisions, it’s crucial to understand how they arrived at those decisions. This transparency allows for scrutiny, accountability, and the identification of potential biases or errors.

One of the most promising approaches to promoting transparency is the use of explainable AI (XAI) techniques. XAI aims to make AI decision-making more understandable to humans. This can involve providing explanations of why an AI system made a particular decision, or highlighting the factors that influenced the AI’s decision-making process.
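For a simple linear scoring model, highlighting the factors behind a decision can be as direct as multiplying each input by its weight. The sketch below assumes such a model; the weights, feature names, and applicant values are all hypothetical. This breakdown is only exact for linear models, and complex models such as deep networks need dedicated XAI methods.

```python
def explain_linear_decision(weights, bias, features):
    """Split a linear score into per-feature contributions, largest magnitude first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked

# Hypothetical credit-scoring weights and one hypothetical applicant.
weights = {"income_k": 0.5, "debt_k": -1.0, "years_employed": 2.0}
bias = -10.0
applicant = {"income_k": 40, "debt_k": 15, "years_employed": 3}

score, ranked = explain_linear_decision(weights, bias, applicant)
print("score:", score)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.1f}")
```

The ranked list shows which factors pushed the score up or down, which is the kind of explanation a reviewer or an affected customer could be given.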

Approaches to Promoting AI Transparency

- Explainable AI (XAI): Using techniques to make AI decision-making more understandable.

- Model Cards: Providing documentation about AI models, including their purpose, training data, and limitations.

- Auditing and Monitoring: Regularly reviewing AI systems to ensure they are functioning as intended.

Another valuable tool is the use of “model cards,” which provide documentation about AI models, including their purpose, training data, and limitations. Model cards can help users understand the context in which an AI model was developed and the potential biases or limitations it may have.
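One lightweight way to keep such documentation alongside a model is a small structured record. The sketch below covers only the three items named above (purpose, training data, and limitations); the class name, field names, and example values are hypothetical, and published model card templates include many more sections.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class ModelCard:
    """Minimal model documentation: purpose, training data, and limitations."""
    name: str
    purpose: str
    training_data: str
    limitations: list = field(default_factory=list)

    def to_json(self):
        """Serialize the card so it can be stored or published with the model."""
        return json.dumps(asdict(self), indent=2)

# Hypothetical card for an illustrative model.
card = ModelCard(
    name="loan-screening-v1",
    purpose="Rank loan applications for manual review.",
    training_data="Historical applications; some regions are under-represented.",
    limitations=[
        "Not validated for business loans.",
        "Performance on under-represented regions is uncertain.",
    ],
)
print(card.to_json())
```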

Regular auditing and monitoring are also essential for ensuring AI systems are functioning as intended and are not producing unintended consequences. This may involve reviewing the AI’s performance metrics, examining its decision-making process, and gathering feedback from users.
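A recurring audit can start as a simple comparison of current performance metrics against a recorded baseline. The sketch below is one possible shape for that check; the metric names, values, and the 0.05 tolerance are assumptions chosen for illustration.

```python
def audit_metrics(baseline, current, tolerance=0.05):
    """Return metrics that are missing or have dropped more than tolerance."""
    findings = {}
    for name, base_value in baseline.items():
        now = current.get(name)
        if now is None:
            findings[name] = "missing from current report"
        elif base_value - now > tolerance:
            findings[name] = f"dropped from {base_value:.2f} to {now:.2f}"
    return findings

# Hypothetical metrics: overall accuracy and true positive rate per group.
baseline = {"accuracy": 0.91, "tpr_group_a": 0.88, "tpr_group_b": 0.86}
current = {"accuracy": 0.90, "tpr_group_a": 0.87, "tpr_group_b": 0.74}

findings = audit_metrics(baseline, current)
for metric, issue in findings.items():
    print(metric, issue)
```

Tracking per-group metrics matters here: overall accuracy barely moves in this example, while one group's true positive rate falls sharply.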

In conclusion, promoting transparency and explainability in AI is essential for building trust, ensuring accountability, and fostering responsible AI adoption. By using XAI techniques, providing model cards, and conducting regular audits, businesses can ensure their AI systems are understandable, transparent, and aligned with ethical principles.

Navigating Legal and Regulatory Frameworks for AI in the US

The legal and regulatory landscape for AI in the US is rapidly evolving. While there are currently no comprehensive federal laws specifically regulating AI, a number of existing laws and regulations may apply to AI systems, particularly in areas like data privacy, consumer protection, and employment discrimination.

Several federal agencies, including the Federal Trade Commission (FTC) and the Equal Employment Opportunity Commission (EEOC), have issued guidance on the use of AI, emphasizing the importance of fairness, transparency, and accountability. Some states and cities are also beginning to enact their own AI regulations, particularly in areas like automated decision-making and facial recognition.

Key Regulatory Considerations for AI in the US

- Data Privacy: Complying with data privacy laws like the California Consumer Privacy Act (CCPA).

- Consumer Protection: Ensuring AI systems do not engage in deceptive or unfair practices.

- Employment Discrimination: Avoiding discriminatory outcomes in hiring and employment decisions.

The European Union’s AI Act, which entered into force in August 2024 with obligations phasing in over the following years, may also have implications for US businesses that operate in the EU or that provide AI systems to EU customers. The AI Act establishes a risk-based framework for regulating AI, with the strictest requirements applying to AI systems that pose the highest risk to fundamental rights and safety.

Businesses must stay informed about the evolving legal and regulatory landscape for AI and ensure their AI systems comply with all applicable laws and regulations. This may involve consulting with legal experts, conducting compliance audits, and implementing robust data governance and privacy practices.

Conclusion

Responsible AI adoption in US business is an ongoing journey that requires a commitment to fairness, transparency, and accountability. By embracing ethical principles, businesses can harness the transformative potential of AI while mitigating the risks and ensuring it benefits society as a whole.

| Key Point | Brief Description |
|---|---|
| 💡 Algorithmic Bias | AI systems can amplify societal biases present in training data. |
| 🔒 Data Privacy | Protecting personal data is crucial for maintaining trust and complying with laws. |
| ⚖️ Transparency | Understanding how AI systems make decisions is vital for accountability. |
| 🛡️ Regulatory Compliance | Staying informed about evolving AI regulations is essential. |

FAQ

What is algorithmic bias?

Algorithmic bias occurs when AI systems make unfair or discriminatory decisions due to biased training data. It can perpetuate societal inequalities and lead to unjust outcomes.

Why is data privacy important in AI?

Data privacy is crucial in AI because AI systems often collect and process vast amounts of personal information. Protecting this data is essential for maintaining trust and complying with data protection laws.

How can businesses promote transparency in AI?

Businesses can promote transparency by using explainable AI (XAI) techniques, providing model cards, and regularly auditing and monitoring their AI systems.

What regulations apply to AI in the US?

Key regulations include data privacy laws like the CCPA, consumer protection laws enforced by the FTC, and employment discrimination laws enforced by the EEOC, all of which can apply to AI systems.

How can businesses ensure fairness in AI systems?

Businesses can ensure fairness by carefully curating training data, using bias detection tools, and measuring fairness using metrics like equal opportunity and demographic parity.

Conclusion

Navigating the ethical implications of AI in business requires a proactive and thoughtful approach. By prioritizing fairness, transparency, and accountability, businesses can harness the power of AI for good, creating value for their stakeholders while upholding ethical principles.