Federated Learning 2026: Data Privacy in US Healthcare

By 2026, federated learning will be crucial for data privacy in US healthcare, enabling collaborative AI model training without compromising sensitive patient information across diverse institutions.

The landscape of healthcare in the United States is evolving rapidly, driven by growing demand for advanced analytics and an equally firm need for stringent data privacy. Federated learning sits at the intersection of the two, and this article compares three leading frameworks for data privacy in US healthcare as of 2026. The approach lets AI models learn from decentralized datasets held at separate healthcare institutions without the raw data leaving its source, which changes how medical research and clinical applications can use sensitive patient information.

The Imperative of Federated Learning in US Healthcare by 2026

By 2026, the convergence of growing digital health records, sophisticated AI algorithms, and heightened regulatory scrutiny under laws such as HIPAA will make traditional centralized data aggregation untenable for many healthcare applications. Federated learning offers a robust alternative: multiple healthcare providers collaboratively train a shared machine learning model while sensitive patient data stays within each provider's own infrastructure. This distributed paradigm is not merely an optimization; it is becoming a fundamental requirement for ethical and compliant AI development in the US healthcare sector.

The sheer volume and diversity of healthcare data, ranging from electronic health records (EHRs) to imaging scans and genomic sequences, present both immense opportunities and significant challenges. Centralizing such data is often impractical due to storage costs, bandwidth limitations, and, most critically, privacy concerns. Federated learning sidesteps these issues by bringing the computation to the data, rather than the data to the computation. This decentralized approach fosters collaboration among institutions that might otherwise be hesitant to share raw patient information.
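
As a rough illustration of bringing the computation to the data, the sketch below simulates federated averaging in plain Python. Everything in it is an assumption made for illustration: the three synthetic "sites", the one-dimensional linear model, the function names `local_update` and `federated_average`, and the learning rate. It is not the API of any framework discussed in this article.

```python
import random


def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few epochs of gradient descent on one site's private data.

    The model is y = w * x + b and `weights` is the pair (w, b).
    Only the updated weights are returned; `data` never leaves the site.
    """
    w, b = weights
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x
            grad_b += 2 * err
        w -= lr * grad_w / len(data)
        b -= lr * grad_b / len(data)
    return (w, b)


def federated_average(client_weights, client_sizes):
    """Average client weights, weighted by each client's example count."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)


def make_site_data(n, seed):
    """Synthetic stand-in for one institution's records (true w=3, b=1)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.uniform(-1, 1)
        data.append((x, 3.0 * x + 1.0 + rng.gauss(0, 0.01)))
    return data


# Three hypothetical sites with different amounts of data.
sites = [make_site_data(50, 1), make_site_data(80, 2), make_site_data(30, 3)]

global_weights = (0.0, 0.0)
for round_num in range(100):
    # Each site trains locally, starting from the current global model.
    updates = [local_update(global_weights, d) for d in sites]
    # The coordinator sees only weights and example counts.
    global_weights = federated_average(updates, [len(d) for d in sites])

print(global_weights)
```

Only the `(w, b)` pairs and the example counts cross the site boundary in this simulation. Weighting the average by dataset size is one common choice; it keeps a large site from counting the same as a small one.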

Addressing Data Silos and Enhancing Model Robustness

One of the primary advantages of federated learning is its ability to overcome the problem of data silos. Healthcare institutions frequently operate independently, leading to datasets that are often fragmented and localized. This fragmentation can hinder the development of comprehensive and generalizable AI models. Federated learning allows models to learn from the aggregated knowledge across these silos, leading to more robust and accurate predictions without ever merging the underlying raw data.

- Data Diversity: Access to a wider range of patient demographics and conditions improves model generalizability.

- Reduced Bias: Training on diverse datasets can help mitigate algorithmic bias present in single-institution data.

- Scalability: Easily integrates new data sources without extensive data transfer infrastructure.

The regulatory landscape, particularly HIPAA, places stringent requirements on the handling of Protected Health Information (PHI). Because raw PHI stays with the institution that holds it, federated learning aligns well with these requirements and provides a pathway for innovation that respects patient confidentiality. This makes it an indispensable tool for advancing AI in US healthcare.

In short, federated learning is poised to become a cornerstone of AI innovation in US healthcare by 2026. Its ability to support collaborative model training while protecting patient privacy directly addresses some of the most pressing challenges facing the industry, paving the way for more effective and ethically sound medical AI applications.

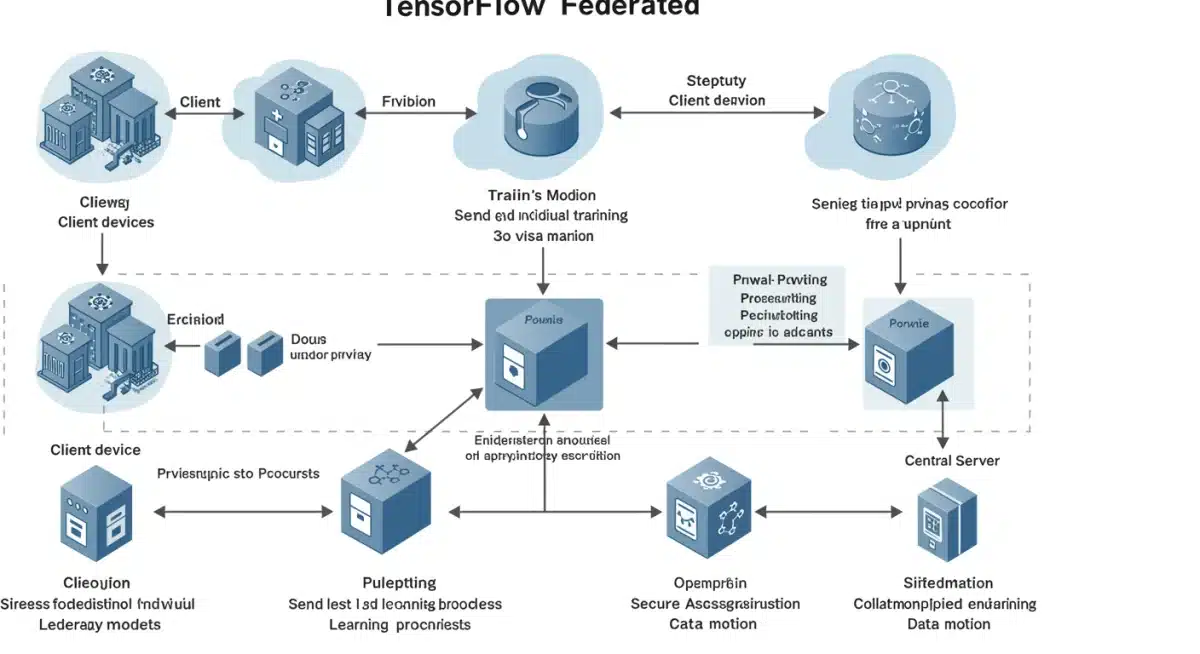

TensorFlow Federated (TFF): A Google-Backed Solution for Healthcare

TensorFlow Federated (TFF), developed by Google, stands out as a powerful open-source framework designed for implementing federated learning. Its robust architecture and extensive community support make it a strong contender for healthcare applications in 2026. TFF provides a high-level API to express federated computations, allowing researchers and developers to focus on model logic rather than the complexities of distributed systems and privacy protocols.

The framework’s design emphasizes modularity and flexibility, making it adaptable to various federated learning scenarios, from simple averaging to more complex algorithmic structures. Its roots in the widely adopted TensorFlow ecosystem also mean that developers can leverage existing knowledge and tools, accelerating adoption within healthcare research and development teams.

Key Features and Privacy Mechanisms

TFF incorporates several features crucial for sensitive healthcare data. It supports various aggregation algorithms, including secure aggregation, which combines client updates cryptographically so that the server learns only their sum and never an individual update. The framework also supports differential privacy, a technique that adds calibrated noise and thereby provides formal guarantees about how much any individual's data can influence the result.

- Secure Aggregation: Combines model updates in a way that individual contributions are not revealed.

- Differential Privacy: Clips model updates and adds calibrated noise to limit what can be inferred about any individual data record.

- Flexible Abstractions: Allows for custom federated algorithms beyond standard federated averaging.
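
To make the secure aggregation idea concrete, here is a minimal sketch of one well-known approach, pairwise additive masking, written in plain Python. It is a simulation under loud assumptions and not TFF's API: the names `pairwise_masks`, `mask_update`, and `aggregate` are hypothetical, and a single seeded random generator stands in for the pairwise key agreement a real protocol would use. It also ignores client dropouts.

```python
import random

MODULUS = 2 ** 32  # all masked arithmetic is done modulo this value
SCALE = 10 ** 4    # fixed-point scale for encoding floats as integers


def encode(value):
    """Encode a float as a non-negative fixed-point integer."""
    return int(round(value * SCALE)) % MODULUS


def decode(value):
    """Map an integer in [0, MODULUS) back to a signed float."""
    if value >= MODULUS // 2:
        value -= MODULUS
    return value / SCALE


def pairwise_masks(num_clients, vector_len, seed=0):
    """For each pair (i, j) with i < j, draw one shared random mask.

    Client i adds the mask and client j subtracts it, so every mask
    cancels when all masked updates are summed.
    """
    rng = random.Random(seed)  # assumption: stands in for pairwise shared secrets
    masks = [[0] * vector_len for _ in range(num_clients)]
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            for k in range(vector_len):
                m = rng.randrange(MODULUS)
                masks[i][k] = (masks[i][k] + m) % MODULUS
                masks[j][k] = (masks[j][k] - m) % MODULUS
    return masks


def mask_update(update, mask):
    """What a client would send: its encoded update plus its mask."""
    return [(encode(u) + m) % MODULUS for u, m in zip(update, mask)]


def aggregate(masked_updates):
    """What the server computes: the sum, in which the masks cancel."""
    total = [0] * len(masked_updates[0])
    for mu in masked_updates:
        total = [(t + v) % MODULUS for t, v in zip(total, mu)]
    return [decode(t) for t in total]


updates = [[0.5, -1.25], [1.0, 0.75], [-0.25, 2.0]]
masks = pairwise_masks(len(updates), 2)
masked = [mask_update(u, m) for u, m in zip(updates, masks)]
summed = aggregate(masked)
print(summed)  # the element-wise sum of the three updates
```

Each masked vector looks like random integers on its own, yet the server recovers the exact sum. No noise is involved here; noise belongs to differential privacy, which is a separate mechanism that can be layered on top.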

While TFF offers significant advantages, its complexity can mean a steep learning curve for those new to federated learning or distributed systems. However, its comprehensive documentation and active community help mitigate this challenge. For US healthcare institutions, TFF represents a mature and well-supported option for developing privacy-preserving AI solutions, especially for tasks like disease prediction, diagnostic imaging analysis, and personalized treatment recommendations.

In essence, TensorFlow Federated provides a robust, scalable, and privacy-conscious foundation for developing cutting-edge AI in healthcare. Its integration of advanced privacy techniques and its open-source nature position it as a leading framework for addressing the unique data privacy demands of the US healthcare system by 2026.

PySyft: Democratizing Privacy-Preserving AI with PyTorch

PySyft, an open-source library built on PyTorch, aims to democratize privacy-preserving AI by making advanced techniques like federated learning and homomorphic encryption accessible to a wider audience. As a framework, PySyft is particularly attractive for US healthcare organizations already utilizing PyTorch for their machine learning initiatives, offering seamless integration and a familiar development environment.

Its philosophy centers on enabling secure, multi-party computation directly within existing deep learning workflows. This means that researchers and developers can continue to use their preferred PyTorch models and simply add PySyft’s capabilities to introduce privacy protection. This approach minimizes the overhead of adopting new tools and accelerates the deployment of privacy-focused AI in clinical and research settings.

Advanced Privacy Features and Interoperability

PySyft offers a rich toolkit for privacy. Beyond federated learning, it integrates homomorphic encryption, which allows computations to be performed on encrypted data without decrypting it, so the party doing the computation never sees the plaintext. It also supports secure multi-party computation (SMC), enabling multiple entities to jointly compute a function over their inputs while keeping those inputs private.

- Homomorphic Encryption: Compute on encrypted data, maintaining confidentiality throughout.

- Secure Multi-Party Computation (SMC): Collaboratively compute without revealing individual inputs.

- Differential Privacy: Adds statistical noise to protect individual data points during model training.
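
The differential privacy bullet can be sketched with the widely used clip-then-add-Gaussian-noise recipe. This is a plain-Python illustration, not PySyft's API: the function names and the `clip_norm` and `noise_multiplier` parameters are assumptions chosen for the example, and turning a noise multiplier into a formal (epsilon, delta) guarantee requires a privacy accountant that is not shown.

```python
import math
import random


def l2_norm(vec):
    return math.sqrt(sum(v * v for v in vec))


def clip_update(update, clip_norm):
    """Scale the update down so its L2 norm is at most clip_norm."""
    norm = l2_norm(update)
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [v * factor for v in update]


def dp_average(updates, clip_norm, noise_multiplier, rng):
    """Clip each client's update, sum, add Gaussian noise, then average.

    Clipping bounds how much any one client can move the sum; the noise
    standard deviation is scaled to that bound.
    """
    clipped = [clip_update(u, clip_norm) for u in updates]
    dim = len(clipped[0])
    summed = [sum(u[k] for u in clipped) for k in range(dim)]
    stddev = noise_multiplier * clip_norm
    noisy = [s + rng.gauss(0.0, stddev) for s in summed]
    return [v / len(updates) for v in noisy]


rng = random.Random(42)
updates = [[3.0, 4.0], [0.3, 0.4], [-6.0, 8.0]]  # hypothetical client updates
private_mean = dp_average(updates, clip_norm=1.0, noise_multiplier=0.5, rng=rng)
print(private_mean)
```

A larger `noise_multiplier` gives stronger privacy at the cost of a noisier model, which is the trade-off a healthcare team would need to tune for each task.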

The interoperability of PySyft with the PyTorch ecosystem is a significant advantage for healthcare institutions. Many academic and research facilities in the US have adopted PyTorch for its flexibility and dynamic computational graph, making PySyft a natural extension for their privacy needs. Its focus on user-friendliness, combined with powerful privacy tools, makes it an attractive option for developing AI applications that adhere to strict healthcare privacy regulations.

PySyft’s commitment to making privacy-preserving AI accessible positions it as a vital framework for the US healthcare sector in 2026. By enabling secure collaboration and robust data protection, it empowers institutions to unlock the full potential of their data for medical advancements without compromising patient trust or regulatory compliance.

Intel’s OpenFL: Enterprise-Grade Federated Learning for Healthcare

Intel’s Open Federated Learning (OpenFL) is an open-source framework specifically designed for enterprise-grade federated learning. Backed by Intel, a major player in computing hardware, OpenFL brings a strong focus on performance, scalability, and security, making it a compelling option for large-scale healthcare systems and pharmaceutical companies in the US by 2026.

OpenFL emphasizes a robust, production-ready architecture, built to handle complex, real-world federated learning scenarios. Its design principles prioritize ease of deployment and management within existing IT infrastructures, a critical factor for healthcare organizations with stringent operational requirements. The framework supports a variety of machine learning tasks and models, providing flexibility for diverse clinical and research applications.

Performance, Security, and Deployment in Clinical Settings

A key differentiator for OpenFL is its optimization for Intel hardware, potentially offering performance benefits for organizations already invested in Intel-based computing infrastructure. It also provides strong security features, including robust authentication and authorization mechanisms, crucial for protecting sensitive patient data in the healthcare context.

- Hardware Optimization: Leverages Intel hardware for enhanced performance and efficiency.

- Robust Security: Features strong authentication and authorization for data protection.

- Scalable Architecture: Designed for large-scale deployments across numerous healthcare sites.

OpenFL’s enterprise focus means it is built with the operational realities of large organizations in mind. It offers clear mechanisms for managing federated experiments, tracking model progress, and ensuring compliance. This makes it particularly suitable for multi-institutional research collaborations or large hospital networks looking to deploy AI solutions across their entire ecosystem.

Although newer than TFF, OpenFL is a strong contender for US healthcare thanks to Intel’s backing and its focus on enterprise features. Its emphasis on a production-ready environment and its integrated security features are highly attractive for institutions that prioritize seamless deployment and robust data governance in their federated learning initiatives.

Comparative Analysis of Frameworks for US Healthcare (2026)

When evaluating TensorFlow Federated (TFF), PySyft, and OpenFL for US healthcare applications in 2026, several factors come into play, primarily revolving around ease of use, privacy features, and suitability for specific organizational needs. Each framework offers distinct advantages, making the choice dependent on the healthcare institution’s existing infrastructure, technical expertise, and specific project requirements.

TFF, with its Google backing and TensorFlow integration, offers a mature and well-documented platform, ideal for organizations already familiar with TensorFlow. Its strong support for secure aggregation and differential privacy makes it suitable for a wide range of privacy-sensitive tasks. PySyft, on the other hand, appeals to PyTorch users, providing advanced privacy techniques like homomorphic encryption with a focus on accessibility. OpenFL, from Intel, targets enterprise users, emphasizing performance, scalability, and robust security for large-scale deployments.

Choosing the Right Framework: Considerations for Healthcare Providers

The decision often boils down to a balance of factors. For a research institution heavily invested in TensorFlow, TFF might be the most straightforward path. If an organization prioritizes advanced cryptographic privacy and uses PyTorch, PySyft offers compelling features. For large hospital systems or pharmaceutical companies seeking an enterprise-grade solution with strong performance and security, OpenFL presents a strong case.

- Existing ML Ecosystem: Compatibility with TensorFlow or PyTorch is a major deciding factor.

- Privacy Requirements: Different levels of privacy guarantees (differential privacy, homomorphic encryption) offered.

- Deployment Scale: Suitability for small research projects versus large, multi-institutional deployments.

- Resource Availability: Technical expertise within the organization and community support for troubleshooting.

All three frameworks are actively developed and are expected to mature significantly by 2026, incorporating new privacy-preserving techniques and improving ease of use. The choice will increasingly depend on the specific nuances of a healthcare organization’s data governance policies, regulatory compliance needs, and long-term AI strategy. Understanding these trade-offs is crucial for successful implementation of federated learning in the highly regulated US healthcare environment.

Ultimately, the best framework is one that aligns with an organization’s strategic goals, technical capabilities, and most importantly, its commitment to patient data privacy and ethical AI development.

Challenges and Future Outlook for Federated Learning in US Healthcare

Despite its immense promise, the widespread adoption of federated learning in US healthcare by 2026 faces several significant challenges. These include overcoming technical complexities, addressing regulatory ambiguities, and fostering greater trust and collaboration among diverse healthcare stakeholders. The journey from proof-of-concept to widespread clinical deployment requires careful navigation of these hurdles.

Technical challenges often involve the heterogeneity of data across different institutions, which can impact model convergence and performance. Furthermore, the computational overhead of some privacy-preserving techniques, such as homomorphic encryption, can be substantial, requiring powerful hardware and optimized algorithms. Standardizing data formats and interoperability protocols will be crucial for seamless federated learning implementations.

Regulatory Landscape and Ethical Considerations

The regulatory landscape, while generally supportive of privacy, still needs to evolve to specifically address federated learning. Clearer guidelines on how federated learning complies with HIPAA and other state-specific privacy laws would significantly accelerate adoption. Ethical considerations, such as ensuring fairness and preventing bias in models trained on diverse datasets, also remain paramount.

- Data Heterogeneity: Managing variations in data quality, format, and distribution across sites.

- Computational Overhead: Balancing strong privacy with acceptable training times and resource usage.

- Regulatory Clarity: Developing specific guidelines for federated learning under HIPAA and other laws.

- Trust and Governance: Establishing clear agreements and governance models for multi-party collaboration.
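
One way to get a first look at data heterogeneity before training is to compare each site's label distribution with the pooled distribution. The sketch below does this with total variation distance. It assumes sites are willing to share per-class counts, which is itself a disclosure decision for the governance agreement; the site names, class labels, and counts are made up for illustration.

```python
def normalize(counts, classes):
    """Turn a {class: count} mapping into a probability list over `classes`."""
    total = sum(counts.get(c, 0) for c in classes)
    return [counts.get(c, 0) / total for c in classes]


def total_variation(p, q):
    """Total variation distance: 0 means identical, 1 means disjoint."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def site_skew(site_counts):
    """Distance between each site's label mix and the pooled label mix."""
    classes = sorted({c for counts in site_counts.values() for c in counts})
    pooled = {
        c: sum(counts.get(c, 0) for counts in site_counts.values())
        for c in classes
    }
    pooled_dist = normalize(pooled, classes)
    return {
        site: total_variation(normalize(counts, classes), pooled_dist)
        for site, counts in site_counts.items()
    }


# Hypothetical per-class counts reported by two sites.
skew = site_skew({
    "site_a": {"negative": 90, "positive": 10},
    "site_b": {"negative": 50, "positive": 50},
})
print(skew)
```

Sites with a high score see a class mix that differs markedly from the federation as a whole, which is a signal that plain federated averaging may converge slowly or unevenly. Label skew is only one kind of heterogeneity; differences in feature distributions and data quality need separate checks.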

Looking ahead to 2026 and beyond, the future of federated learning in US healthcare is bright, but contingent on addressing these challenges proactively. Continued research into more efficient privacy-preserving algorithms, coupled with collaborative efforts to develop industry standards and best practices, will be essential. The increasing demand for personalized medicine and data-driven insights, combined with an unwavering commitment to patient privacy, will continue to drive innovation in this field.

The maturation of these frameworks, alongside a clearer regulatory environment and stronger inter-institutional trust, will solidify federated learning’s role as a transformative technology in healthcare, enabling a new era of collaborative, privacy-preserving AI that ultimately benefits patients across the nation.

| Key Aspect | Description for US Healthcare 2026 |
|---|---|
| Data Privacy Imperative | Federated learning is crucial for HIPAA compliance and patient trust in AI model development. |
| Framework Comparison | TFF (TensorFlow), PySyft (PyTorch), and OpenFL (Enterprise) offer distinct strengths for varying needs. |
| Challenges Ahead | Heterogeneous data, computational overhead, and regulatory clarity remain key hurdles for widespread adoption. |
| Future Impact | Enables collaborative AI, fostering robust models for personalized medicine and diagnostics. |

Frequently Asked Questions About Federated Learning in Healthcare

What is federated learning, and why does it matter for US healthcare?

Federated learning is a machine learning approach that trains AI models on decentralized datasets without directly sharing raw data. It matters for US healthcare because it lets institutions collaborate on AI development and overcome data silos while adhering to patient data privacy regulations such as HIPAA.

How do TFF, PySyft, and OpenFL differ?

TFF integrates with TensorFlow and offers mature privacy tools for general ML tasks. PySyft, built on PyTorch, emphasizes advanced cryptographic privacy such as homomorphic encryption. OpenFL, from Intel, focuses on enterprise-grade performance and security for large-scale deployments and is optimized for Intel hardware.

Does federated learning guarantee complete data privacy?

No. Federated learning significantly enhances data privacy by keeping raw data localized, but no system offers absolute privacy. Frameworks add techniques such as differential privacy and secure aggregation to provide strong, measurable guarantees that limit how much can be inferred about any individual record.

How does federated learning benefit clinical research?

Federated learning enables clinical researchers to train AI models on larger, more diverse patient populations across multiple institutions. This leads to more robust, generalizable models, helps reduce bias, and accelerates the discovery of new insights without the legal and logistical hurdles of centralizing sensitive patient data.

What are the main challenges to adoption?

Key challenges include managing data heterogeneity across institutions, addressing the computational overhead of advanced privacy techniques, establishing clearer regulatory guidelines for federated learning, and building trust and governance frameworks among collaborating healthcare organizations.

Conclusion

The journey towards a more data-driven yet privacy-conscious US healthcare system is undeniably complex, but federated learning offers a compelling and increasingly essential pathway forward. By 2026, the frameworks discussed—TensorFlow Federated, PySyft, and Intel’s OpenFL—will play critical roles in shaping how AI is developed and deployed in medical contexts. Each brings unique strengths to the table, catering to different technical ecosystems and organizational needs, yet all share the common goal of enabling collaborative intelligence without compromising the sanctity of patient data. The continued evolution of these technologies, coupled with a concerted effort to address remaining technical and regulatory challenges, promises a future where AI can unlock unprecedented medical advancements, all while upholding the highest standards of privacy and trust in US healthcare.