Machine learning model optimization: unlock your models’ true potential

Machine learning model optimization involves fine-tuning parameters and applying best practices to enhance model performance, ensuring accurate predictions and efficient use of resources.

Machine learning model optimization is essential for achieving peak performance in your predictive models. Have you ever wondered how the right adjustments can lead to significant improvements? Let’s dive into the world of model optimization and discover the strategies that can make a difference.

Understanding machine learning model optimization

Understanding machine learning model optimization is crucial for enhancing model performance and achieving better results. In this section, we will explore the core concepts that underpin successful optimization strategies.

What is Model Optimization?

Model optimization involves tuning a model's hyperparameters, inputs, and training setup to improve accuracy and efficiency. The goal is a model that predicts outcomes more reliably on data it has not seen before. Without effective optimization, even the best algorithms may underperform.

Key Techniques

There are several techniques to optimize machine learning models:

- Hyperparameter tuning: Adjusting learning rates, batch sizes, and other settings.

- Feature selection: Choosing the most relevant features to enhance performance.

- Regularization: Preventing overfitting by adding penalties to complex models.

- Cross-validation: Estimating how well the model will perform on unseen data.

Each technique addresses common challenges that arise during model training. For instance, hyperparameter tuning can significantly impact a model’s ability to learn effectively from training data.

Understanding your dataset is just as important, because high-quality data leads to better models. Data often needs cleaning or transforming before it is fed into the model. A solid grasp of these fundamentals lays the groundwork for building high-performing solutions.

Regularly monitoring the model’s performance after optimization is also key. Make adjustments based on real-world results to continuously refine accuracy. This iterative process helps ensure that the model remains effective as new data comes in.

Key techniques for optimizing models

Key techniques for optimizing models play a vital role in enhancing the performance of machine learning systems. By applying the right methods, you can significantly improve your model’s accuracy and efficiency.

Hyperparameter Tuning

Hyperparameter tuning involves adjusting settings that govern the learning process. This includes factors like learning rate, batch size, and regularization strength. Finding the best combination can be essential for achieving better results from your model.

- Experiment with different learning rates to find the sweet spot for training.

- Utilize techniques like grid search or random search to explore various parameter settings.

- Use Bayesian optimization, offered by tools such as Optuna, for a more sample-efficient search.

Changing one parameter at a time can help isolate its effect, but hyperparameters often interact, so track how each change affects overall performance.
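A minimal sketch of grid search and random search with scikit-learn's `GridSearchCV` and `RandomizedSearchCV`, assuming a synthetic dataset from `make_classification` in place of real data. It tunes `C`, the inverse regularization strength of logistic regression, rather than learning rate or batch size, which matter mainly for neural networks. The search ranges are illustrative choices, not recommendations.

```python
from scipy.stats import loguniform
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV

# Synthetic data stands in for your real training set.
X, y = make_classification(n_samples=500, n_features=20, random_state=0)

# Grid search: evaluate every value in an explicit grid with 5-fold CV.
grid = GridSearchCV(
    LogisticRegression(max_iter=1000),
    param_grid={"C": [0.01, 0.1, 1.0, 10.0]},
    cv=5,
    scoring="accuracy",
)
grid.fit(X, y)

# Random search: sample a fixed number of candidates from a log-uniform distribution.
rand = RandomizedSearchCV(
    LogisticRegression(max_iter=1000),
    param_distributions={"C": loguniform(1e-3, 1e2)},
    n_iter=20,
    cv=5,
    scoring="accuracy",
    random_state=0,
)
rand.fit(X, y)

print("grid search best:", grid.best_params_, round(grid.best_score_, 3))
print("random search best:", rand.best_params_, round(rand.best_score_, 3))
```

Grid search grows multiplicatively with every parameter you add, while random search spends a fixed budget (`n_iter`) and tends to cover wide, log-scaled ranges more economically.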

Feature Selection

Feature selection is crucial for improving model performance. It involves identifying the most relevant data features that contribute to accurate predictions. By reducing the number of features, you can simplify the model and reduce the risk of overfitting.

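Consider techniques like forward selection, which starts with no features and adds the most helpful one at each step, and backward elimination, which starts with all features and removes the least useful one at each step. These strategies can help highlight significant features.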

By focusing on the features that matter the most, your model can process data more efficiently, leading to faster training times and better outcomes.
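Both greedy strategies are available in scikit-learn through `SequentialFeatureSelector`. The sketch below assumes a synthetic dataset where only 3 of 10 features carry signal; the target of 3 features and the logistic regression estimator are illustrative choices.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.linear_model import LogisticRegression

# 10 features, only 3 informative; shuffle=False keeps the informative ones first.
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=3, n_redundant=0,
    shuffle=False, random_state=0,
)

model = LogisticRegression(max_iter=1000)

# Forward selection: start empty, greedily add the feature that improves CV score most.
forward = SequentialFeatureSelector(model, n_features_to_select=3, direction="forward", cv=5)
forward.fit(X, y)

# Backward elimination: start with all features, greedily drop the least useful one.
backward = SequentialFeatureSelector(model, n_features_to_select=3, direction="backward", cv=5)
backward.fit(X, y)

print("forward keeps:", forward.get_support(indices=True))
print("backward keeps:", backward.get_support(indices=True))

# transform() returns only the selected columns.
X_reduced = forward.transform(X)
print("shape before/after:", X.shape, X_reduced.shape)
```

The two directions can disagree on which features to keep, so it is worth comparing them on validation performance rather than trusting either one blindly.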

Regularization Techniques

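Regularization techniques are vital in preventing overfitting. These techniques add a penalty to the loss function, discouraging overly complex models. L1 (Lasso) regularization penalizes the sum of absolute weights and can shrink some weights to exactly zero, while L2 (Ridge) regularization penalizes the sum of squared weights and shrinks them toward zero without eliminating them. Both help maintain a balance between model complexity and accuracy.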

When applying regularization, monitor how it affects your model on validation sets. If performance improves, continue tuning the regularization parameters for optimal results.
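Here is a minimal sketch of that workflow with scikit-learn's `Lasso` and `Ridge`, assuming synthetic regression data in which only 5 of 20 features influence the target. It scores each regularization strength (`alpha`) on a held-out validation split and counts how many coefficients each penalty drives to exactly zero. Note that scikit-learn scales the two objectives differently, so the same `alpha` value is not equivalent across `Lasso` and `Ridge`.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, Ridge
from sklearn.model_selection import train_test_split

# 20 features, only 5 of which actually influence the target.
X, y = make_regression(n_samples=200, n_features=20, n_informative=5, noise=5.0, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.25, random_state=0)

results = {}
# alpha is the regularization strength: larger alpha means a stronger penalty.
for alpha in [0.1, 1.0, 10.0]:
    lasso = Lasso(alpha=alpha).fit(X_train, y_train)
    ridge = Ridge(alpha=alpha).fit(X_train, y_train)
    results[alpha] = {
        "lasso_r2": lasso.score(X_val, y_val),        # validation R^2
        "lasso_zeros": int(np.sum(lasso.coef_ == 0)),  # coefficients eliminated by L1
        "ridge_r2": ridge.score(X_val, y_val),
        "ridge_zeros": int(np.sum(ridge.coef_ == 0)),  # L2 shrinks but rarely zeroes
    }
    print(alpha, results[alpha])
```

Pick the `alpha` with the best validation score, then confirm the choice with cross-validation before touching the test set.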

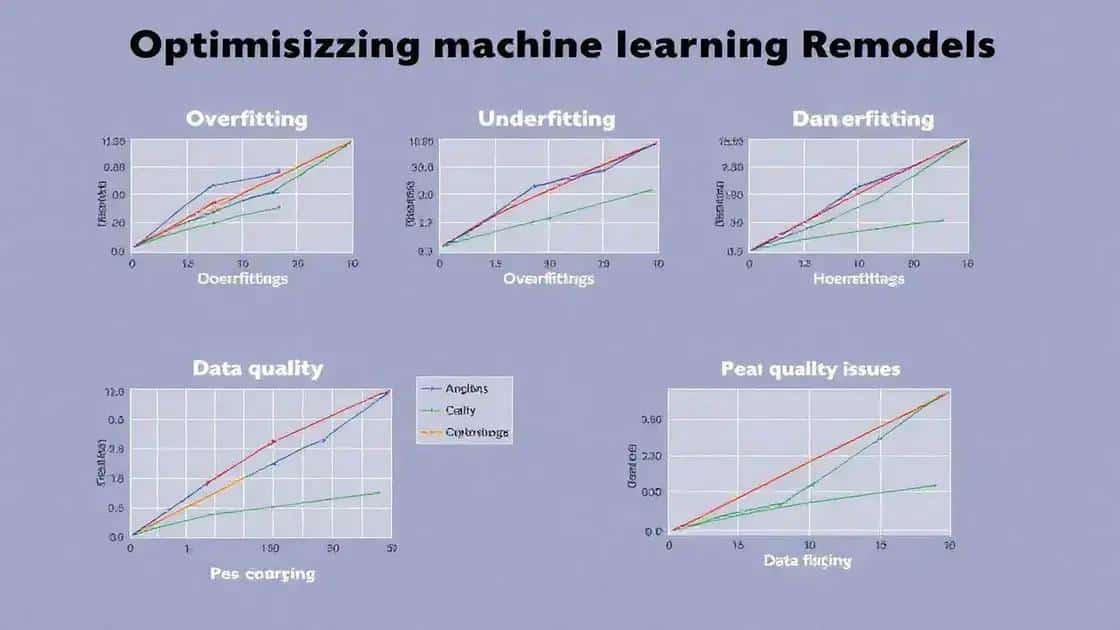

Common challenges in model optimization

Common challenges in model optimization can impact the success of machine learning projects. Navigating these obstacles is crucial to improve your model’s effectiveness.

Data Quality Issues

One major challenge involves data quality. Incomplete or inconsistent data can lead to unreliable models. Ensuring that you have a clean and well-prepared dataset is essential for successful optimization. Techniques such as data cleaning and preprocessing can help remove noise and improve model accuracy.
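A minimal preprocessing sketch with scikit-learn, assuming a small hypothetical dataset with missing values and features on very different scales. Median imputation and standard scaling are common defaults, not the only options. Fit the pipeline on training data only and reuse it on validation and test data, so statistics from held-out data do not leak into training.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical raw data: columns are age and income, with gaps marked as np.nan.
X_raw = np.array([
    [25.0, 50_000.0],
    [np.nan, 62_000.0],
    [41.0, np.nan],
    [33.0, 58_000.0],
])

preprocess = Pipeline([
    ("impute", SimpleImputer(strategy="median")),  # fill gaps with each column's median
    ("scale", StandardScaler()),                   # zero mean, unit variance per column
])

X_clean = preprocess.fit_transform(X_raw)
print(X_clean.round(2))
```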

Overfitting and Underfitting

Overfitting and underfitting are common issues during the optimization process. Overfitting occurs when the model learns the training data too well, capturing noise rather than the underlying pattern. On the other hand, underfitting happens when the model is too simple to capture the data’s complexity. Striking the right balance between these two extremes is key.

- Regularization: Apply techniques like L1 and L2 regularization to control complexity.

- Cross-validation: Use cross-validation to ensure the model generalizes well to unseen data.

- Hyperparameter tuning: Adjust parameters to find the optimal settings.

Monitoring your model’s performance using validation datasets is crucial to avoid both issues.
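One way to see both problems is a validation curve: train and validation scores across increasing model complexity. This sketch uses scikit-learn's `validation_curve` with a decision tree's `max_depth` on synthetic data; the depth values are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import validation_curve
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=500, n_features=20, n_informative=5, random_state=0)

depths = [1, 2, 4, 8, 16, 32]  # from very shallow to effectively unconstrained here
train_scores, val_scores = validation_curve(
    DecisionTreeClassifier(random_state=0), X, y,
    param_name="max_depth", param_range=depths, cv=5,
)

train_mean = train_scores.mean(axis=1)
val_mean = val_scores.mean(axis=1)
for depth, tr, va in zip(depths, train_mean, val_mean):
    # Low scores on both sets suggest underfitting;
    # a large train-validation gap suggests overfitting.
    print(f"max_depth={depth}: train={tr:.3f}, validation={va:.3f}, gap={tr - va:.3f}")
```

The depth where validation accuracy peaks, before the gap widens, is a reasonable starting point for further tuning.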

Computational Costs

Optimization can also be computationally expensive. Complex models often require extensive resources to train, and a hyperparameter search multiplies that cost because it trains many candidate models. This can pose a challenge for organizations with limited infrastructure, so it is important to assess the compute you have before planning large searches.

Utilizing cloud computing resources can help manage these costs. By leveraging cloud services, you can access powerful hardware to support your optimization efforts, making it easier to fine-tune your models.

Model Interpretability

Another challenge in model optimization is achieving interpretability. Many complex models, such as deep learning architectures, can be seen as black boxes. This makes it hard to understand how they arrive at their predictions. Enhancing model interpretability is important to build trust in the results.

Using techniques like SHAP values or LIME can improve your ability to explain model predictions, making your optimized models more transparent to stakeholders.
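SHAP and LIME each require their own packages. As a dependency-light illustration of the same goal, the sketch below uses scikit-learn's `permutation_importance` instead; note that this is a different technique from SHAP or LIME, giving a global, model-agnostic ranking rather than per-prediction explanations. The synthetic data and random forest are assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

# shuffle=False keeps the 2 informative features in the first two columns.
X, y = make_classification(
    n_samples=500, n_features=6, n_informative=2, n_redundant=0,
    shuffle=False, random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

model = RandomForestClassifier(random_state=0).fit(X_train, y_train)

# Shuffle one column at a time on held-out data and measure the drop in accuracy.
result = permutation_importance(model, X_test, y_test, n_repeats=10, random_state=0)

for idx in result.importances_mean.argsort()[::-1]:
    print(f"feature {idx}: {result.importances_mean[idx]:.3f} +/- {result.importances_std[idx]:.3f}")
```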

Tools to assist in model optimization

Tools to assist in model optimization are essential for achieving better performance and efficiency in machine learning projects. Many software solutions can help streamline the optimization process.

Popular Libraries

Several popular libraries are available for optimizing machine learning models. These libraries provide pre-built functions that simplify complex tasks. Some notable examples include:

- Scikit-learn: A versatile library that offers a range of tools for model training and evaluation.

- Ray Tune: An optimization library from the Ray project that allows for scalable, distributed hyperparameter tuning.

- Optuna: A hyperparameter optimization framework that enables efficient search and tuning.

Utilizing these libraries can save time and help ensure that your models are optimized effectively.

Cloud-Based Solutions

Cloud-based platforms also provide resources for model optimization. Services like Amazon SageMaker, Google Cloud Vertex AI (formerly AI Platform), and Azure Machine Learning make it easier to train, deploy, and monitor models. These platforms offer various tools for:

- Automating the hyperparameter tuning process.

- Scaling resources up or down based on demand.

- Integrating training and optimization workflows seamlessly.

By leveraging cloud solutions, you access powerful computing resources without investing heavily in physical hardware.

Visualization Tools

Visualization tools are crucial for understanding model performance. Tools such as TensorBoard help track metrics during training. They provide insights into how models learn over time, allowing you to make informed adjustments. Visualization aids in detecting overfitting or underfitting, as you can view model loss and accuracy graphs.

Using these tools will enhance your optimization strategy, enabling you to pinpoint issues and adjust accordingly.

Automated Machine Learning (AutoML)

Automated Machine Learning (AutoML) tools simplify the optimization process by automating feature selection, model selection, and hyperparameter tuning. Platforms like H2O.ai, DataRobot, and Google Cloud AutoML make it easy for users to build and optimize models quickly, even with limited expertise.

Utilizing AutoML can significantly reduce the time spent on manual tuning and improve your model’s performance, allowing you to focus on higher-level tasks.

Best practices for successful optimization

Best practices for successful optimization of machine learning models can significantly enhance their performance. By following these guidelines, you can improve accuracy and efficiency in your projects.

Understand Your Data

First and foremost, gaining a deep understanding of your data is essential. Analyze the dataset for trends, patterns, and outliers. This knowledge allows you to choose appropriate features and preprocessing methods. Cleaning your data thoroughly ensures that the model receives high-quality input.
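A first pass at this analysis can be sketched with pandas, assuming a small hypothetical dataset (in practice you would load your own, for example with `pd.read_csv`). It summarizes each column, counts missing values, and flags outliers with the common 1.5 × IQR rule, which is one convention among several.

```python
import pandas as pd

# Hypothetical dataset; the age of 120 mimics a data-entry error.
df = pd.DataFrame({
    "age": [23, 35, 31, 29, 41, 38, 120],
    "income": [48_000, 61_000, None, 52_000, 75_000, 69_000, 58_000],
})

print(df.describe())    # count, mean, std, min, quartiles, max per column
print(df.isna().sum())  # missing values per column

# Flag outliers outside [Q1 - 1.5*IQR, Q3 + 1.5*IQR].
q1, q3 = df["age"].quantile([0.25, 0.75])
iqr = q3 - q1
outliers = df[(df["age"] < q1 - 1.5 * iqr) | (df["age"] > q3 + 1.5 * iqr)]
print(outliers)
```

Whether a flagged value is an error to fix or a rare but real case is a judgment call that depends on your domain.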

Start Simple

It’s advisable to start with a simple model. Simple models often perform surprisingly well and can establish a strong baseline. Once a basic model is in place, you can iteratively improve performance through optimization techniques.
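A minimal sketch of establishing baselines with scikit-learn, using its bundled breast cancer dataset as a stand-in for your own. A `DummyClassifier` that always predicts the most frequent class sets the floor; a scaled logistic regression is one reasonable choice of simple model, and anything more complex should beat both.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

# Trivial baseline: always predict the most frequent class.
dummy = DummyClassifier(strategy="most_frequent")
# Simple but real model: standardized features plus logistic regression.
simple = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

dummy_acc = cross_val_score(dummy, X, y, cv=5).mean()
simple_acc = cross_val_score(simple, X, y, cv=5).mean()
print(f"most-frequent baseline: {dummy_acc:.3f}")
print(f"logistic regression:    {simple_acc:.3f}")
```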

Use Cross-Validation

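Apply cross-validation to get a more reliable estimate of your model's performance than a single train/validation split provides. The most common form divides your dataset into k subsets (folds), trains the model on k-1 of them while validating on the remaining one, and repeats until each fold has served as the validation set. Cross-validation helps you estimate how well your model is likely to perform on unseen data, so you can make adjustments based on its results.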

- Use k-fold cross-validation for a robust evaluation.

- Keep track of performance metrics across different folds.

- Keep the test set out of training and tuning entirely so the final evaluation stays unbiased.

By implementing cross-validation, you can better gauge your optimization strategies.
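These practices can be combined in a short scikit-learn sketch, assuming the bundled breast cancer dataset and a random forest as placeholders. A test set is split off first, 5-fold cross-validation runs on the remaining development data, and the test set is used exactly once at the end. For imbalanced classification data, `StratifiedKFold` is often a better choice than plain `KFold`.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import KFold, cross_val_score, train_test_split

X, y = load_breast_cancer(return_X_y=True)

# Hold out a test set first; it is not touched until the very end.
X_dev, X_test, y_dev, y_test = train_test_split(X, y, test_size=0.2, stratify=y, random_state=0)

model = RandomForestClassifier(random_state=0)

# 5-fold cross-validation on the development data only.
kfold = KFold(n_splits=5, shuffle=True, random_state=0)
fold_scores = cross_val_score(model, X_dev, y_dev, cv=kfold)
print("per-fold accuracy:", fold_scores.round(3))
print(f"mean={fold_scores.mean():.3f}, std={fold_scores.std():.3f}")

# Only after all modeling choices are made: one final evaluation on the test set.
test_acc = model.fit(X_dev, y_dev).score(X_test, y_test)
print(f"test accuracy: {test_acc:.3f}")
```

A large spread across folds is itself a useful signal: it suggests the estimate is sensitive to which data the model sees.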

Continuous Monitoring

Continuous monitoring of model performance after deployment is crucial. Set up a system to track key metrics over time. This practice helps identify when your model may need retraining due to data drift or other factors. By knowing when to optimize again, you can maintain performance consistently.

In addition, consider setting up automated alerts when performance drops below a certain threshold. This proactive approach ensures that potential issues are addressed promptly.
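A minimal sketch of such an alert in plain Python. `RollingAccuracyMonitor` is a hypothetical helper, not part of any library, and it assumes true labels eventually arrive for each prediction. The window size and threshold are illustrative; in a real system the `print` would be replaced by a call to your alerting service.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Hypothetical helper: tracks accuracy over the last `window` labeled predictions."""

    def __init__(self, window: int, threshold: float):
        self.window = window
        self.threshold = threshold
        self.outcomes = deque(maxlen=window)  # 1 = correct, 0 = wrong; old entries drop off

    def record(self, prediction, actual) -> bool:
        """Store one outcome and return True if an alert should fire."""
        self.outcomes.append(1 if prediction == actual else 0)
        if len(self.outcomes) < self.window:
            return False  # not enough data yet for a stable estimate
        return self.accuracy() < self.threshold

    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes)


monitor = RollingAccuracyMonitor(window=5, threshold=0.8)
# Hypothetical (prediction, actual) pairs arriving over time.
stream = [(1, 1), (0, 0), (1, 1), (1, 1), (0, 0), (1, 0), (0, 1)]
alerts = []
for step, (pred, actual) in enumerate(stream):
    if monitor.record(pred, actual):
        alerts.append(step)
        print(f"ALERT at step {step}: rolling accuracy {monitor.accuracy():.2f}")
```

A rolling window reacts to recent degradation without being drowned out by a long history of good performance; the trade-off is noisier estimates when the window is small.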

Document Everything

Finally, always document your optimization process. Recording choices made during model selection, data preparation, and feature engineering can inform future projects and help other team members understand your strategies. Clear documentation fosters collaboration and improves overall efficiency.

FAQ – Frequently Asked Questions About Model Optimization

What is model optimization?

Model optimization is the process of adjusting parameters and techniques to improve the performance and accuracy of machine learning models.

Why is understanding data important for optimization?

Understanding your data helps identify trends, clean noise, and choose relevant features, which are critical for building an effective model.

How does cross-validation help in optimization?

Cross-validation allows you to assess the model’s performance on different data subsets, ensuring that it generalizes well to new, unseen data.

What are some tools I can use for model optimization?

Popular tools include libraries such as Scikit-learn, Optuna, and Ray Tune, cloud platforms such as AWS and Google Cloud for scalable training and deployment, and visualization tools such as TensorBoard for monitoring performance.